My problem with the IDists’ 500 coins question (if you saw 500 coins lying heads up, would you reject the hypothesis that they were fair coins, and had been fairly tossed?) is not that there is anything wrong with concluding that they were not. Indeed, faced with just 50 coins lying heads up, I’d reject that hypothesis with a great deal of confidence.

It’s the inference drawn from that answer of mine: that if, as a “Darwinist”, I am prepared to accept that a pattern can be indicative of something other than “chance” (exemplified by a fairly tossed fair coin), then I must logically also sign on to the idea that an Intelligent Agent (as the alternative to “Chance”) must be inferable from such a pattern.

This, I suggest, is profoundly fallacious.

First of all, it assumes that “Chance” is the “null hypothesis” here. It isn’t. Sure, the null hypothesis (fair coins, fairly tossed) is rejected, and, sure, the hypothesized process (fair coins, fairly tossed) is a stochastic process – in other words, the result of any one toss is unknowable beforehand (by definition, otherwise it wouldn’t be “fair”), and both the outcome sequence of 500 tosses and the proportion of heads in that sequence are also unknown. What we do know, however, because of the properties of the fair-coin-fairly-tossed process, is the probability distribution, not only of the proportion of heads that the outcome sequence will have, but also of the lengths of runs-of-heads (or tails, but to keep things simple, I’ll stick with heads).
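Because the process is fully specified, the distribution of the proportion of heads can be computed exactly rather than just simulated. Here is a minimal Python sketch using exact binomial counts; the helper name is my own, and the 40% and 60% cut-offs simply echo the ones used in this post.

```python
from fractions import Fraction
from math import comb


def prob_heads_between(n, lo, hi):
    """Exact P(lo <= number of Heads <= hi) for n fair coins, fairly tossed."""
    return Fraction(sum(comb(n, k) for k in range(lo, hi + 1)), 2 ** n)


if __name__ == "__main__":
    n = 500
    all_heads_50 = Fraction(1, 2 ** 50)                  # 50 coins, every one Heads
    outside_40_60 = 1 - prob_heads_between(n, 201, 299)  # 200 or fewer Heads, or 300 or more
    print(f"P(50 of 50 Heads)           = {float(all_heads_50):.3e}")
    print(f"P(<= 40% or >= 60% of 500)  = {float(outside_40_60):.3e}")
    print(f"P(500 of 500 Heads) = 2^-500 = {float(Fraction(1, 2 ** n)):.3e}")
```

The first line is the 50-coins case from the opening paragraph; using exact fractions avoids any rounding until the final conversion for printing.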

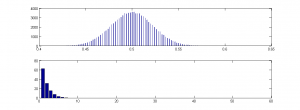

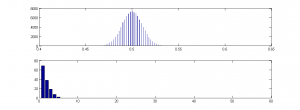

And in fact, I simulated a series of 100,000 such runs (I didn’t go up to the canonical 2^500 runs, for obvious reasons), using MatLab, and here is the outcome:

As you can see from the top plot, the distribution is a beautiful bell curve, and in none of the 100,000 runs do I get anything as low as 40% Heads or as high as 60% Heads.


Moreover, I also plotted the average length of runs-of-heads – the average is just over 2.5, and the maximum is less than 10, and the frequency distribution is a lovely descending curve (lower plot).
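For readers who want to try this themselves, here is a minimal Python sketch of that kind of simulation. It is not the original MatLab script (which isn't shown); the function names and the smaller default number of sequences are my own assumptions. It tosses 500 fair coins per sequence, records the proportion of Heads, and collects the length of every maximal run of consecutive Heads.

```python
import random


def toss_sequence(n, rng):
    """n fair coins, fairly tossed: 1 = Heads, 0 = Tails (one random bit per toss)."""
    bits = rng.getrandbits(n)
    return [(bits >> i) & 1 for i in range(n)]


def heads_run_lengths(seq):
    """Lengths of every maximal run of consecutive Heads (1s) in seq."""
    runs, current = [], 0
    for toss in seq:
        if toss == 1:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    if current:                      # a run of Heads can end the sequence
        runs.append(current)
    return runs


def simulate(n_sequences=2000, n_tosses=500, seed=1):
    """Proportions of Heads per sequence, plus all Heads-run lengths pooled together."""
    rng = random.Random(seed)
    proportions, all_runs = [], []
    for _ in range(n_sequences):
        seq = toss_sequence(n_tosses, rng)
        proportions.append(sum(seq) / n_tosses)
        all_runs.extend(heads_run_lengths(seq))
    return proportions, all_runs


if __name__ == "__main__":
    props, runs = simulate()
    print(f"proportion of Heads: min {min(props):.3f}, max {max(props):.3f}")
    print(f"mean run of Heads: {sum(runs) / len(runs):.2f}, longest: {max(runs)}")
```

For maximal runs as defined here, probability theory gives a mean run length of 2 for a fair coin, so the exact figure you get depends on how runs are defined and counted.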

If, therefore, I were to be shown a sequence of 500 Heads and Tails in which:

- the proportion of Heads was less than, say, 40%, OR

- the proportion of Heads was greater than, say, 60%, OR

- the average length of runs-of-heads was a lot more than 2.5, OR

- the distribution of the proportions was not a nice bell curve, OR

- the distribution of the lengths of runs-of-heads was not a nice descending (geometric) curve like the one in the lower plot,

I would also reject the null hypothesis that the process that generated the sequence was “fair coins, fairly tossed”. For example:

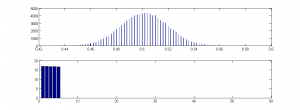

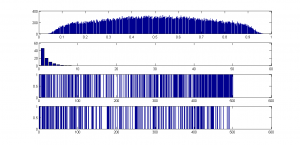

This was another simulation. As you can see, the bell curve is pretty well identical to the first, and the proportions of heads are just as we’d expect from fair coins, fairly tossed – but can we conclude it was the result of “fair coins, fairly tossed”? Well, no. Because look at the lower plot – the mean length of runs of heads is 2.5, as before, but the distribution is very odd. There are no runs of heads longer than 5, and all lengths are of pretty well equal frequency. Here is one of these sequences, where 1 stands for Heads and 0 stands for Tails:


1 0 1 1 1 0 0 1 1 1 1 1 0 0 0 0 0 1 1 1 1 0 0 0 1 1 1 1 0 0 0 0 1 1 1 1 1 0 0 0 0 1 1 1 1 1 0 1 1 1 1 0 0 0 0 1 1 1 1 0 0 1 1 1 1 1 0 0 0 1 1 1 1 0 0 0 1 1 1 1 0 0 0 1 0 0 0 0 0 1 1 1 1 1 0 1 1 1 1 0 0 0 1 1 1 1 0 0 0 0 0 1 1 0 1 1 1 1 1 0 0 0 0 1 0 1 1 0 0 0 0 0 1 1 0 0 0 0 1 1 1 1 1 0 0 1 1 1 1 0 0 0 1 1 0 0 0 1 1 0 0 1 1 0 1 1 1 1 1 0 1 1 0 0 0 1 1 1 1 0 1 1 1 1 1 0 0 0 0 0 1 0 1 0 0 1 1 1 1 0 1 1 1 1 0 0 0 0 1 1 0 0 0 0 0 1 1 0 0 0 1 0 1 1 1 1 0 0 0 0 0 1 1 1 0 0 0 0 1 1 1 1 0 0 0 0 1 1 1 1 0 1 1 1 0 0 0 0 1 1 1 1 0 1 1 1 1 1 0 0 0 0 0 1 1 1 0 0 0 0 1 1 0 0 0 0 1 1 0 1 0 0 0 1 1 1 0 0 0 0 0 1 0 0 0 0 1 1 1 1 0 0 0 0 1 1 1 1 0 1 1 1 1 1 0 1 1 1 1 0 0 0 0 0 1 1 1 1 0 0 0 0 1 1 1 0 0 0 1 1 1 0 0 0 0 1 0 0 0 1 1 1 1 1 0 0 0 0 0 1 1 0 0 0 1 1 0 0 0 0 1 1 1 1 1 0 1 0 0 1 1 0 0 0 1 1 0 0 0 0 1 1 0 0 0 1 1 1 0 0 1 1 1 1 0 0 0 0 0 1 1 0 0 1 1 1 1 1 0 0 0 0 1 1 1 0 1 1 1 0 0 0 0 1 1 1 1 0 0 0 0 1 1 0 0 0 1 1 1 0 0 0 0 1 1 1 1 0 0 1 1 1 0 0 1 0 0 0 0 1 1 1 0 0 0 0 0 1 1 1 1 0 0 1 1 1 1 0 0 0

Would you detect, on looking at it, that it was not the result of “fair coins, fairly tossed”? I’d say at first glance it all looks pretty reasonable. Nor does it conform to any fancy number, like pi in binary. I defy anyone to find a pattern in that sequence. The reason I so defy you is that it was actually generated by a random process. I had no idea what the sequence was going to be before it was generated, and I’d generated another 99,999 of them before the loop finished. It is the result of a stochastic process, just as the first set were, but this time the process was different. For this series, instead of randomly choosing each outcome from an equiprobable “Heads” or “Tails”, I randomly selected the length of the next run of each toss-type from the values 1 to 5, with an equal probability for each length. So I might get 3 Heads, 2 Tails, 5 Heads, 1 Tail, etc. This means that I got far more runs of 5 Heads than I did the first time, but far fewer (infinitely fewer, in fact!) runs of 6 Heads! So, ironically, the lack of very long runs of Heads is the very clue that tells you that this series is not the result of the process “fair coins, fairly tossed”.
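Here is a sketch of that second process as described: instead of tossing each coin, draw the length of each successive run uniformly from 1 to 5 and alternate Heads and Tails. This is my own Python reconstruction, not the original script; the function names, the random choice of starting face, and trimming the final run so the sequence is exactly 500 tosses long are assumptions.

```python
import random


def uniform_run_sequence(n, max_run=5, seed=None):
    """Alternate runs of Heads (1) and Tails (0), each run's length uniform on 1..max_run."""
    rng = random.Random(seed)
    seq = []
    toss = rng.randint(0, 1)              # which face starts the sequence
    while len(seq) < n:
        length = rng.randint(1, max_run)  # every run length equally likely
        seq.extend([toss] * length)
        toss = 1 - toss                   # runs alternate by construction
    return seq[:n]                        # trim the last run to exactly n tosses


def longest_heads_run(seq):
    """Length of the longest stretch of consecutive Heads."""
    best = current = 0
    for toss in seq:
        current = current + 1 if toss == 1 else 0
        best = max(best, current)
    return best


if __name__ == "__main__":
    seq = uniform_run_sequence(500, seed=42)
    print(" ".join(map(str, seq[:40])))
    print("proportion of Heads:", sum(seq) / len(seq))
    print("longest run of Heads:", longest_heads_run(seq))
```

Every run is still chosen blindly, yet no run of Heads can ever exceed 5, whereas 500 fair tosses usually contain at least one run of 6 or more.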

But it IS a “chance” process, in the sense that no intelligent agent is doing the selecting, although an intelligent agent is designing the process itself – but then that is also true of the coin toss.

Now, how about this one?

Prizes for spotting the stochastic process that generated the series!

The serious point here is that by rejecting the null of a specific stochastic process (fair coins, fairly tossed) we are NOT rejecting “chance”, because there are a vast number of possible stochastic processes. “Chance” is not the null; “fair coins, fairly tossed” is.

However, the second fallacy in the “500 coins” story is that, not only are we not rejecting “chance” when we reject “fair coins, fairly tossed”, but nor are we rejecting the only alternative to “Intelligently designed”. We are simply rejecting one specific stochastic process. Many natural processes are stochastic; the outcomes of some have bell-curve probability distributions, of others Poisson distributions, and of still others, neither. For example, many natural stochastic processes are homeostatic: the more extreme some parameter becomes, the more likely the next state is to be closer to the mean.
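As an illustration (my own toy, not one of the post's simulations), here is one simple homeostatic stochastic process in Python. Each toss is still random, but the probability of Heads leans against whatever imbalance has built up so far, through a logistic function; the `strength` parameter and the function names are assumptions. With `strength = 0` it is exactly a fair coin.

```python
import math
import random


def homeostatic_sequence(n, strength, rng):
    """Tosses whose odds lean back toward balance as (Heads - Tails) grows.

    strength = 0 reduces to a fair coin; larger values pull harder toward the mean.
    """
    seq, imbalance = [], 0               # imbalance = Heads so far minus Tails so far
    for _ in range(n):
        p_heads = 1.0 / (1.0 + math.exp(strength * imbalance))
        toss = 1 if rng.random() < p_heads else 0
        seq.append(toss)
        imbalance += 1 if toss else -1
    return seq


def spread_of_proportions(strength, n_sequences=500, n_tosses=500, seed=0):
    """Standard deviation of the proportion of Heads across many sequences."""
    rng = random.Random(seed)
    props = [sum(homeostatic_sequence(n_tosses, strength, rng)) / n_tosses
             for _ in range(n_sequences)]
    mean = sum(props) / len(props)
    return math.sqrt(sum((p - mean) ** 2 for p in props) / len(props))


if __name__ == "__main__":
    for k in (0.0, 0.1, 0.5):
        print(f"strength {k}: spread of proportion of Heads = {spread_of_proportions(k):.4f}")
```

No individual toss is predictable, yet the proportions cluster far more tightly around 50% than a fair coin's do, so a test of “fair coins, fairly tossed” would reject this process without implicating any designer.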

The third fallacy is that there is something magical about “500 bits”. There isn’t. Sure, if a p value for data under some null is less than 2^-500 we can reject that null, but if physicists are happy with 5 sigma, so am I, and 5 sigma corresponds to a p value of only about 2^-22.
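A quick check of that arithmetic in Python, taking “5 sigma” to mean the one-sided tail probability of a standard normal distribution (the convention usually quoted for particle-physics discoveries; treat that reading as an assumption). Both numbers are ordinary double-precision values; the point is simply how far apart they are.

```python
import math

# One-sided tail probability of a standard normal beyond z sigma:
# P(Z > z) = erfc(z / sqrt(2)) / 2
p_five_sigma = 0.5 * math.erfc(5 / math.sqrt(2))

print(f"5 sigma, one-sided p = {p_five_sigma:.3e}")             # about 2.87e-07
print(f"as a power of two:     2^{math.log2(p_five_sigma):.1f}")  # about 2^-21.7
print(f"2^-500               = {2.0 ** -500:.3e}")              # about 3.05e-151
```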

And fourthly, the 500 bits is a phantom anyway. Seth Lloyd computed it as the information capacity of the observable universe, which isn’t the same as the number of possible times you can toss a coin, and in any case, why limit what can happen to the “observable universe”? Do IDers really think that we just happen to be at the dead centre of all that exists? Are they covert geocentrists?

Lastly, I repeat: chance is not a cause. Sure we can use the word informally as in “it was just one of those chance things….” or even “we retained the null, and so can attribute the observed apparent effects to chance…” but only informally, and in those circumstances, “chance” is a stand-in for “things we could not know and did not plan”. If we actually want to falsify some null hypothesis, we need to be far more specific – if we are proposing some stochastic process, we need to put specific parameters on that process, and even then, chance is not the bit that is doing the causing – chance is the part we don’t know, just as I didn’t know when I ran my MatLab script what the outcome of any run would be. The part I DID know was the probability distribution – because I specified the process. When a coin is tossed, it does not fall Heads because of “chance”, but because the toss in question was one that led, by a process of Newtonian mechanics, to the outcome “Heads”. What was “chance” about it is that the tosser didn’t know which, of all possible toss-types, she’d picked. So the selection process was blind, just as mine is in all the above examples.

In other words it was non-intentional. That doesn’t mean it was not designed by an intelligent agent, but nor does it mean that it was.

And if I now choose one of those “chance” coin-toss sequences my script generated, and copy-paste it below, then it isn’t a “chance” sequence any more, is it? Not unless Microsoft has messed up (Heads = “true”, Tails = “false”):

true false true false false false false true true false true true false true true true false true false true true true true true false true false false false true true true true true true true true true false false false true true false true true false false false true false false true true false false false true false true false true true true true true true false false true false true true true true true true false true true true false true false false false false false false false false false false false false false false true false false true false true true true false true true true false true true false false true true true true true false true false true false false true true false true true true true true true true false false true false false true false false true true false true true true true false false true false false false false true false false true true false false true false true true false true false true true true true true true true true true true true true true false true false false true false false false true false false true true true false false true true true false true true true false false false true false true false false true false true false true true false true false true false true false false true true true false true true false false false false true false false true false true true true true true false true true true false false false true false true false true true true false false false false false false false true true false true false true false true true false false false true false true true false true false true true true true false true false false false false true false true true true true false false true false true true true true false true true false false true false true false true true true true true false false false true true true false false false true true true false true false true true true true true true true false false true false true false false true true true false true false true true false false true 
true true false true true false false false true false false false false true false false true true true true false false true false false true true true true false true false false true false true true false false true true false false true true false true false true true false false false false false false false true false true true false false false true true true false false false true false true false false false true true false true false true true true true false true false false false true false true true true false false false false true false true true false true true true false true true false true false false true false true false true true false false true false true false true false false true true false false

I specified it. But you can’t tell that by looking. You have to ask me.


ETA2: Here’s another one – any guesses as to the process (again entirely stochastic)? Would you reject the null of “fair coins, fairly tossed”?

0 1 0 1 0 1 0 1 0 0 1 1 1 1 1 0 1 0 1 1 1 1 0 0 0 1 0 1 1 1 0 1 0 0 0 1 1 0 0 0 1 1 0 0 0 1 0 1 1 0 1 0 0 1 1 0 1 1 0 1 1 0 1 0 1 0 0 1 1 0 0 1 0 0 1 0 0 0 1 1 0 1 1 0 0 0 1 1 1 0 1 0 0 0 1 0 1 1 0 1 1 0 1 1 0 0 0 1 1 1 1 0 0 0 0 0 1 1 0 0 1 0 1 1 1 1 0 0 1 1 0 1 1 0 0 1 0 1 1 1 1 0 0 0 0 1 1 1 1 0 0 0 1 1 1 1 0 1 0 0 0 1 0 1 0 0 1 1 1 0 0 0 0 1 1 0 0 1 0 1 0 1 1 0 0 1 1 1 0 1 0 0 0 1 1 0 1 0 1 0 0 1 0 1 1 1 0 0 1 0 1 0 1 0 0 1 0 1 1 0 0 0 0 0 0 1 1 1 0 0 0 0 1 1 1 1 1 0 0 0 1 1 1 0 1 0 0 0 0 1 1 1 0 1 0 0 1 0 1 1 0 0 0 1 1 0 0 1 0 0 0 1 1 1 0 0 1 0 0 1 1 1 1 0 0 0 0 1 1 0 1 0 0 0 1 0 1 1 1 0 0 1 0 0 0 1 0 1 1 0 1 0 1 1 0 1 1 1 0 1 0 1 0 0 0 0 1 1 1 1 1 0 1 0 1 1 0 0 1 1 0 1 1 1 0 1 1 0 0 1 1 0 1 0 1 0 1 0 0 0 1 0 1 0 1 1 1 1 0 0 1 0 1 0 1 1 1 0 1 0 0 1 0 0 1 1 1 0 0 1 1 0 0 1 0 0 0 1 0 0 1 0 1 0 1 1 0 0 0 0 1 1 0 0 0 1 0 1 1 1 0 1 0 1 0 0 1 1 0 1 1 0 0 0 1 0 1 1 0 0 1 1 0 1 0 0 1 0 0 1 1 1 0 1 0 0 1 0 1 0 1 0 1 1 0 1 0 1 1 0 0 1 1 1 0 1 0 0 0 0 1 0 1 1 1 1 0 1 0 0 0 1 1 1 0 0 1 1 0 1

Barry? Sal? William?

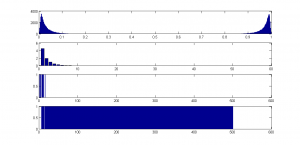

ETA3: And here’s another version:

The top plot is the distribution of proportions of Heads.

The second plot is the distribution of the lengths of runs of Heads.

The bottom two plots represent two runs; blue bars represent Heads.

What is the algorithm? Again, it’s completely stochastic.

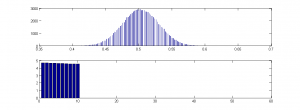

And one final one:

which I think is pretty awesome! Check out that bimodality!


Homochirality here we come!!!

That’s a good point. You can’t just say “this is incompatible with the chance hypothesis” as there is more than one. You have to specify which.

Furthermore, the term “chance” is vague. Many natural processes are neither fully “chance” nor fully deterministic. They form a continuous spectrum.

One can see how it works in the Ising model of a ferromagnet, a favorite toy of statistical physicists. It is somewhat similar to the examples in Lizzie’s post as it uses binary variables, magnetic moments, a.k.a. spins, that can point up or down. The spins are arranged on a square grid. Each spin interacts with its four neighbors only. If two adjacent spins point in the same direction, the energy goes down by 1 unit; if one is up and the other down, the energy goes up by 1 unit. These are the basic rules.
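Those rules translate directly into an energy function. This is a minimal Python sketch; the periodic (wrap-around) edges are my assumption, since the comment doesn't say what happens at the boundary, and spins are stored as +1 (up) and -1 (down).

```python
import random


def ising_energy(spins):
    """Energy of a square-lattice Ising configuration, coupling 1, periodic edges.

    spins is a list of rows of +1 (up) / -1 (down). Each nearest-neighbour pair
    contributes -1 if aligned and +1 if anti-aligned, i.e. -s_i * s_j.
    """
    rows, cols = len(spins), len(spins[0])
    energy = 0
    for i in range(rows):
        for j in range(cols):
            s = spins[i][j]
            # count each bond once: right neighbour and down neighbour
            energy -= s * spins[i][(j + 1) % cols]
            energy -= s * spins[(i + 1) % rows][j]
    return energy


if __name__ == "__main__":
    size = 8
    all_up = [[1] * size for _ in range(size)]
    checkerboard = [[1 if (i + j) % 2 == 0 else -1 for j in range(size)] for i in range(size)]
    rng = random.Random(0)
    disordered = [[rng.choice((1, -1)) for _ in range(size)] for _ in range(size)]
    print(ising_energy(all_up), ising_energy(checkerboard), ising_energy(disordered))
```

On an 8 x 8 lattice there are 128 bonds, so all spins up gives the minimum energy of -128 and the perfect checkerboard the maximum of +128; a random configuration lands somewhere near zero.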

Different “chance hypotheses” in this case are different thermal ensembles. An ensemble is a probability distribution for microstates, which are the equivalents of coin sequences.

The simplest probability distribution is the canonical ensemble at infinite temperature. Every microstate is as likely as any other. This is the equivalent of tossing fair coins. The typical state is well-balanced: about the same number of spins point up and down. In a system with N spins, the imbalance between the up and down spins is roughly the square root of N. Observing large imbalances (a given fraction of N, say N/10) is extremely unlikely for large N.
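Since the infinite-temperature ensemble is literally N fair coins, the square-root-of-N claim is easy to check numerically. A small Python sketch; the trial count and seed are arbitrary choices of mine.

```python
import math
import random


def typical_imbalance(n_spins, trials=200, seed=0):
    """Root-mean-square of (up - down) when every spin is an independent fair coin."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        up = bin(rng.getrandbits(n_spins)).count("1")   # number of spins pointing up
        total += (2 * up - n_spins) ** 2
    return math.sqrt(total / trials)


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        print(f"N = {n:>9}: rms imbalance {typical_imbalance(n):9.1f}   sqrt(N) = {math.sqrt(n):7.1f}")
```

The absolute imbalance grows like the square root of N, so as a fraction of N it shrinks like one over the square root of N, which is why an imbalance of N/10 becomes hopeless for large systems.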

The opposite end of the chance—determinism spectrum is the microcanonical ensemble with the lowest possible energy (or, equivalently, zero temperature). That means every spin has all four neighbors pointing the same way. There are only two such states: all spins up and all spins down, like all heads or all tails.

And then there is a continuous spectrum of thermal ensembles between these two extremes. A microcanonical ensemble is an ensemble of microstates with a given energy. All microstates of that energy are equally likely. So it is both deterministic (fixed energy) and random (anything goes otherwise). Depending on the amount of energy, the typical state of a microcanonical ensemble can resemble either the fully disordered state at infinite temperature or the ordered state at zero temperature. If the energy is high, we observe equal numbers of spins pointing up and down, with imbalances of the order of the square root of N. However, if the energy is low, there is an imbalance between the up and down spins that is of order N (e.g., 75 percent of spins point up and 25 percent down).
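A rough way to see the two regimes is a Metropolis simulation. Note that Metropolis samples the canonical ensemble (fixed temperature) rather than the fixed-energy microcanonical ensemble described above; for large lattices the two give the same typical states, so temperature stands in for energy here. The lattice size, the two temperatures (the 2D Ising critical temperature is about 2.27 in these units), the sweep count and the all-up starting state are all my assumptions.

```python
import math
import random


def metropolis_magnetisation(size, temperature, sweeps, seed=0):
    """|up - down| / N after Metropolis sampling of a size x size Ising lattice.

    Coupling 1, periodic edges, starting from all spins up (an assumption).
    """
    rng = random.Random(seed)
    spins = [[1] * size for _ in range(size)]
    for _ in range(sweeps * size * size):
        i, j = rng.randrange(size), rng.randrange(size)
        neighbours = (spins[(i + 1) % size][j] + spins[(i - 1) % size][j]
                      + spins[i][(j + 1) % size] + spins[i][(j - 1) % size])
        delta_e = 2 * spins[i][j] * neighbours       # energy change if this spin flips
        # accept downhill moves always, uphill moves with Boltzmann probability
        if delta_e <= 0 or rng.random() < math.exp(-delta_e / temperature):
            spins[i][j] = -spins[i][j]
    return abs(sum(map(sum, spins))) / (size * size)


if __name__ == "__main__":
    for t in (1.5, 5.0):
        m = metropolis_magnetisation(16, t, sweeps=200)
        print(f"T = {t}: |up - down| / N = {m:.2f}")
```

At the low temperature the imbalance stays a large fraction of N; at the high temperature the same starting state melts into a roughly balanced one with only a small residual imbalance.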

So when ID proponents say “we reject the chance hypothesis,” we should always ask which chance hypothesis they mean.

Well, we do. And Dembski’s answer is “the relevant chance hypothesis”.

Which is absolutely useless.

More fundamentally flawed is the idea that once you have rejected some “relevant chance hypothesis”, the only remaining hypothesis is “Intelligent Design”.

It’s partly because of conflating “chance” with “unintelligent” or “unintentional”.

They need to say what they mean. But if they do, their argument collapses.

Barry has been vague about where one goes from rejecting independent tossing of coins. Does rejecting “chance” in the case of coins mean that one can then reject a “chance hypothesis” in the case of the evolution of DNA sequences? DNA sequences have natural selection, which coins do not. In the case of DNA sequences that code for a very-nonrandom adaptation, is natural selection part of Barry’s “chance”, or does that include only mutation and genetic drift?

There is no way that “chance” (if one means mutation and genetic drift) could ever produce a fish that can swim or a bird that can fly. But if “chance” includes natural selection that is a very different matter.

Sal Cordova, in a UD post (here), has been crowing that “the 500-fair-coins-heads illustration has been devastating to the materialists”. But even he does not try to say that this illustration shows that natural selection cannot bring about adaptations — he spends most of the post going on about homochirality of amino acids.

There’s a nice Letters piece in Nature about that (I’m sure you know of it).

Thermodynamic control of asymmetric amplification in amino acid catalysis

I’ve added another distribution to my OP which is actually slightly relevant, but I won’t say for now what the algorithm was! I’ll leave it as a challenge to the reader….

And I’m running yet another one now! This one is even more relevant to the homochirality issue.

I do wonder what mechanism they have in mind that can hook up amino acids correctly, but cannot distinguish isomers, being instead at the mercy of some kind of imaginary ‘coin-toss’. The D isomer of an L amino acid is nothing special. On one side, it looks just like glycine, having an -H where L acids have their side chain. On the other, it has a bit of a ‘growth’ – a side chain where L acids just have hydrogen. If you can distinguish side chains well enough to get the correct L acid in place despite the presence of other different side chains, you can just as easily exclude ‘glycine-like’ side chains (i.e. all D acids) along with glycine itself. You’re more likely to mistake a D acid for glycine than for its L isomer, since at the atomic level the molecules cannot be weighed or stuck in polarised light or shoved through an electrophoretic gel or whatever other fantasy mechanism they import from a ‘macro-world’ chemistry lab. If they give the practicalities any thought at all, that is.

There are real problems with ‘peptides-first’ scenarios, but this isn’t one of them.

But as a general point, feedback loops abound in the natural world, both within and outside living systems, and some of these result in “regression towards the mean” or homeostasis, and some accelerate away from it. There’s no reason in principle why a chemical system shouldn’t end up asymmetrical if there are feedback loops involved.
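A toy version of such a feedback loop is a nonlinear Pólya urn. This is my choice of example, not anything from the post or a model of real chemistry; the “left/right” labels, the exponent `alpha` and the run counts are assumptions. Each new item's handedness is random, but the odds favour whichever type is already more common.

```python
import random


def reinforced_fraction(steps, alpha, rng):
    """Final fraction of 'left' items after a nonlinear Polya-urn process.

    Each new item is 'left' with probability L^alpha / (L^alpha + R^alpha):
    alpha = 0 is a fair coin every time, alpha = 1 is the classic Polya urn,
    and alpha > 1 lets whichever type leads pull further ahead.
    """
    left, right = 1, 1
    for _ in range(steps):
        wl, wr = left ** alpha, right ** alpha
        if rng.random() < wl / (wl + wr):
            left += 1
        else:
            right += 1
    return left / (left + right)


def share_extreme(alpha, runs=300, steps=1000, seed=0):
    """Fraction of independent runs that end more than 80% one-handed."""
    rng = random.Random(seed)
    finals = [reinforced_fraction(steps, alpha, rng) for _ in range(runs)]
    return sum(f < 0.2 or f > 0.8 for f in finals) / runs


if __name__ == "__main__":
    for a in (0.0, 1.0, 2.0):
        print(f"alpha = {a}: share of runs ending lopsided = {share_extreme(a):.2f}")
```

With `alpha = 0` essentially no run ends lopsided; with `alpha = 2` nearly every run ends dominated by one type, left or right with equal chance, so the distribution of outcomes is bimodal even though nothing ever chose a side.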

And it doesn’t violate the 2nd law unless a tornado does, which it doesn’t! And a tornado isn’t a living thing. Are tornadoes designed?

“This, I suggest, is profoundly fallacious.”

It’s also incredibly boring and irrelevant to the larger and more meaningful questions for human beings.

Wait, will Lizzie ever elevate herself to ask larger questions here at TSZ?

It seems that for now, at least, most people here are simply interested in narrow IDist opposition.

Coin-tossing/flipping is a frickin’ joke.

Well, it may be boring to you, Gregory, but to a scientist it’s very important. Given that the bulk of science proceeds by null hypothesis testing, it’s really important to define your null precisely, otherwise you will not know what it is you have retained or rejected when you retain or reject it.

And stochastic processes are fascinating, and stochastic processes with feedback even more so. Evolutionary processes are an example of the latter.

So it’s quite a “large” question. But feel free to ask a “larger” one if you like – although if it’s “What is the definition of the word ‘Darwinism’?”, my reaction is – booorring….

ETA: but to be fair, this dead horse probably needs a break, and you are welcome to try to persuade me that what various people mean by “Darwinism” is an interesting and enlightening question 🙂

Could I suggest you start your own blog? If you are not happy with what most people here are into, then why are you here at all?

“as a ‘Darwinist’ I am…” – Lizzie

All that means is: Lizzie is a leprechaun. Lazy Mazzie. No deep thinking allowed.

Lizzie’s definition of ‘Darwinist’ is so primitive as to be laughable; ‘Darwinist’ = ‘Darwin’s theory’. Only the un(der-)educated or un-curious would gladly accept it.

She’s defending obscurantism with her self-label, Darwin my British hero! And she obviously doesn’t want to rise (after throwing away her Catholic youth) beyond Darwin’s 19th c. ideas. Witness her flip-flop philosophy participation in the ‘Darwinism’ thread on her own blog!

Sorry, Lizzie, but on this topic, you’re quite obviously impotent and immature. (Now sic the Darwinist TSZ hounds to bark otherwise!)

Well, 500 coins do eventually lose their sparkle, I have to agree. But my inner nerd remains fascinated. And my inner quantitative methodology teacher remains obsessive.

My inner psychologist is also not unengaged….

“the bulk of science proceeds by null hypothesis testing”

Yeah, I was doing some of that yesterday.

Care to do some sociology of science now Lizzie and calculate your spurious claim regarding “the bulk of science”? No, I didn’t think so.

Where I disagree with you, Gregory, is on the nature of a “definition”. I’m a scientist these days, and for a scientist, words are as Humpty Dumpty uses them – they mean what the writer chooses them to mean. And as long as the writer gives the definition, there shouldn’t be any problem.

Problems arise when people interpret a word used in one sense as though it were used in another, and therefore misunderstand the writer. This happens all the time in conversations about evolution with IDists – and some of that is the fault of the writers, and some the fault of the readers.

But the fact remains that language is a living thing, and there are no canonical definitions, even in dictionaries, which record usage rather than prescribe it.

You were doing some null hypothesis testing yesterday, Gregory? Cool. What was your null?

“My inner psychologist”

Oh, so you are sometimes reflexive then.

The main point of your Blog is not just OBJECTIVE NATURAL SCIENCE, even while the loudest voices here are scientistic, naturalistic and positivistic according to their worldviews?

I don’t know what you mean by this. Can you explain?

I don’t know what you mean by this either. Can you explain?

Gregory,

The fair-coin example may be boring, but that’s about the only thing ID fans at UD are able to wrap their heads around, so far anyway. Lizzie is trying to go beyond this simple model and demonstrate that there are other chance hypotheses.

My addition of the Ising model is an attempt to go to more interesting examples where chance yields something genuinely more interesting. In this case, spontaneous symmetry breaking. Although we may start with equal numbers of up and down spins, at low temperatures the system spontaneously chooses to have more up spins than down ones, or vice versa. This has direct implications for the problem of homochirality. It could emerge spontaneously, just like order in a ferromagnet.

This is why we discuss these other examples here.

Gregory,

You’ve got posting privileges, Gregory. Have at it.

P.S. You’re not making a lot of sense in this thread. Please be more careful if you write an OP.

The Ising model is nice. My last example is also an algorithm that tends to break symmetry.

I must play with yours.

Lizzie,

You can find Java applets that simulate the Ising ferromagnet out there on the Web. Including my own. Google Javalab Ising.

One thing that strikes me is that what we are doing here goes beyond Dembski’s clunky “specification” prescription – we have a series of identifiable patterns here, all of which are highly unlikely to be generated by a “fair coins, fairly tossed”, but none of which are either pre-specified or “semiotically” specified. They simply result from stochastic processes with different properties, and can be detected by careful analysis of more subtle distributions than simply that of the proportions of heads and tails.
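Here is a sketch of one such “more subtle” check in Python: pool the lengths of Heads-runs from many sequences and compare them with what a fair coin predicts, P(length = k) = (1/2)^k, using a Pearson chi-square statistic. The bins (lengths 6 and over pooled), the 99.9% threshold, and the two test processes (my reconstructions of the post's first two simulations) are assumptions; the threshold is approximate because the statistic only approximately follows a chi-square distribution.

```python
import random

# Expected share of maximal Heads-runs of each length for a fair coin:
# P(length = k) = (1/2)^k, with lengths of 6 or more pooled into one bin.
FAIR_SHARES = [0.5, 0.25, 0.125, 0.0625, 0.03125, 0.03125]
CHI2_5DF_999 = 20.515   # 99.9th percentile of chi-square with 5 degrees of freedom


def heads_run_lengths(seq):
    runs, current = [], 0
    for toss in seq + [0]:            # trailing 0 closes a final run of Heads
        if toss == 1:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    return runs


def run_length_chi2(sequences):
    """Pearson chi-square of pooled Heads-run lengths against the fair-coin shares."""
    counts = [0] * 6
    for seq in sequences:
        for length in heads_run_lengths(seq):
            counts[min(length, 6) - 1] += 1
    total = sum(counts)
    return sum((obs - total * share) ** 2 / (total * share)
               for obs, share in zip(counts, FAIR_SHARES))


def fair_sequence(n, rng):
    return [rng.randint(0, 1) for _ in range(n)]


def uniform_run_sequence(n, rng, max_run=5):
    seq, toss = [], rng.randint(0, 1)
    while len(seq) < n:
        seq.extend([toss] * rng.randint(1, max_run))
        toss = 1 - toss
    return seq[:n]


if __name__ == "__main__":
    rng = random.Random(7)
    fair = [fair_sequence(500, rng) for _ in range(50)]
    odd = [uniform_run_sequence(500, rng) for _ in range(50)]
    for name, sample in (("fair coins", fair), ("uniform runs", odd)):
        stat = run_length_chi2(sample)
        verdict = "reject" if stat > CHI2_5DF_999 else "retain"
        print(f"{name:12}: chi-square = {stat:8.1f} -> {verdict} 'fair coins, fairly tossed'")
```

Both samples have about 50% Heads, so a test on proportions alone can't tell them apart; the run-length test separates them easily, and no “specification” is needed to do it.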

Presumably Dembski realises that, and has moved beyond CSI, as he moved beyond the EF, although never quite having the courage to admit their flaws, merely their outdatedness.

I guess his response would be that while all these stochastic processes are possible, there is still the question of how certain stochastic processes (those that produced living things, for example, if they did) came to be found – how the “search for a search” was conducted.

The trouble here for Dembski is that while it may well be that the kinds of stochastic processes that tend to produce living things may be “built in” to the properties of our particular patch of universe, and possibly all of it, there is no way to put an upper limit on the number of trials (no “UPB”, spurious though the 500 bits are) you’d need to come up with a set of stochastic processes that produced us.

And also, no way of knowing how many trials there were, or whether God picked one of the gazillion possibles that worked. If so, there’s no way of telling, from within this universe, whether God picked it or whether it just took until ours turned up for us to notice.

And in any case, it makes the whole Darwin-OOL thing moot.

Thanks!

To me the interest in the 500 coins discussion is in finding how Barry and co. go from being able to reject independent tosses when we see 500 Heads, to being able to reject natural selection as the explanation for biological adaptations.

They are certainly making noises implying that they can do this. But the next step in the argument is missing.

That’s what scientists often do: go from one logical step to another to see how an argument works.

They often quote Durston et al. But Durston et al, although they use non-uniform frequencies of amino acids, still assume random draw from that frequency distribution.

I have no idea why Gregory wants to be so rude about your “elevating” this blog, but this seems plenty elevated to me.

Everyone but you seems interested. Go start your own blog to ask ‘the big questions’. Bye.

I think that a key concept that is missing from all ID/creationist “calculations” of the “improbability” of events is the fact that atoms and molecules interact.

It has been quite fascinating to watch year after year, for something like 50 years now, ID/creationists using things like coins, marbles, Scrabble letters, junkyard parts, and battleship parts as representatives for the properties and behaviors of atoms and molecules.

They deny emphatically that they have this misconception, but everything they assert about molecular assemblies betrays the misconception. For example, in Cordova’s hyper confident post over at UD, he makes this assertion:

This is the way that ID/creationists think about chemistry and physics. It’s habitual. When it comes to complex molecules, everything is formed out of an “ideal gas” of inert particles, and the “probabilities” calculated by using coins and other inert objects apply. Yet they tell us that crystals form “naturally”.

Somewhere along the spectrum of complexity in molecules, atoms and molecules cease to interact and must be guided by intelligence, or semaphores, or some other non-natural process.

I am not a psychologist; but there is more to this misconception than simply ignorance and avoidance of basic physics and chemistry. The misconception is persistent over decades; and it appears impossible to dislodge. No ID/creationist will go near any basic concept test of scientific concepts.

All of the teaching organizations and professional societies in science, math, and engineering now have educational divisions and forums dedicated to dealing with misconceptions and the educational process. As far as I know, none deal with this particular issue.

It has always been a routine tactic of ID/creationists, ever since Morris and Gish, to taunt scientists into debates by using outrageous caricatures of science, and caricatures of “speciation” and “randomness” and the “second law.”

I’m wondering if the over-the-top posts we are seeing over at UD – especially since Kitzmiller vs. Dover – are simply extreme, desperate attempts to keep a dying ID/creationism in the spotlight. Corrections to their misconceptions and misrepresentations never stick but are instead met with more bluster.

I once read a very interesting article about bacterial flagella, which demonstrated that for a bacterium, the medium isn’t a fluid as we experience it, but a sort of fight through a sea of jiggly balloons (my metaphor). So the flagellum acts nothing like a “propeller” but more like a rudder, increasing the probability of going forward rather than back, or left rather than right.

I might have got that wrong, but it was a salutary read, reminding me that things don’t scale linearly beyond certain limits!
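The usual yardstick for this is the Reynolds number, Re = ρvL/μ, the ratio of inertial to viscous forces. Here is a back-of-envelope Python sketch; the sizes and speeds (a roughly 1 µm bacterium at about 30 µm/s, a 2 m swimmer at 1 m/s, water at room temperature) are rough illustrative assumptions, not figures from the thread.

```python
def reynolds_number(density, speed, length, viscosity):
    """Re = density * speed * length / viscosity (dimensionless in SI units)."""
    return density * speed * length / viscosity


WATER_DENSITY = 1000.0     # kg/m^3
WATER_VISCOSITY = 1.0e-3   # Pa*s, roughly water at room temperature

if __name__ == "__main__":
    bacterium = reynolds_number(WATER_DENSITY, 30e-6, 1e-6, WATER_VISCOSITY)  # ~30 um/s, ~1 um long
    swimmer = reynolds_number(WATER_DENSITY, 1.0, 2.0, WATER_VISCOSITY)       # ~1 m/s, ~2 m long
    print(f"bacterium: Re ~ {bacterium:.0e}")   # viscosity dominates, inertia negligible
    print(f"swimmer:   Re ~ {swimmer:.0e}")     # inertia dominates
```

With these assumptions the bacterium sits around 10^-5 and the swimmer around 10^6, more than ten orders of magnitude apart, which is why intuitions from our scale fail so badly at the bacterium's.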

Kinda off topic can someone invite the new guy at UD, Jaceli123, either here or to another pro-science site like TalkRational Life Sciences? The kid’s only a teenager looking to understand science but BA77 and GEM are directing him to nothing but IDiot/Creationist bullshit propaganda sites. Sorry but it just pisses me off to see a young interested student lied to like that.

Here is a talk by Edward Purcell honoring Victor Weisskopf.

Purcell gave a talk in a colloquium at the University of Michigan back when I was there working on the electron g-factor experiment. The title of the talk was “Life at Low Reynolds Number.”

Conservatives tend to be conservative about concepts. They take concepts to be rigid. And as long as they do this, they will have trouble with science, where conceptual change is important.

Conservative Christians go further. They go with John 1:1 – “In the beginning was the Word, and the Word was with God, and the Word was God.” So they take language and concepts as coming directly from God. They tend to see language (with assumed rigid concepts) to be primary, and mere matter just an inconvenience.

Sal Cordova hasn’t explained himself very well. But he seems to take life as information. And if information is just a string of bits, then those bits don’t have to all be of the same chirality. The idea of binding simply doesn’t come into this way of thinking. But it is good entertainment to watch.

OK, it’s really peripheral but I can’t stand it.

In a lot of cosmology theories everything is at the center of the Universe.

Well, presumably everything is at the centre of its own observable universe.

Ah, thanks, that was probably it.

I think the same problem afflicts Granville Sewell’s argument that his Second-Law-like equations show evolution can’t make complex organisms.

He defines his “X-entropies”, one for each element or compound. These are supposedly increasing. So carbon (for example) is supposed to be becoming more and more randomly distributed. So, supposedly, are other elements and compounds.

The problem is that there is no term in his X-entropy equations for carbon to interact with other chemicals. No carbon dioxide, no methane, no carbohydrates. In short, he’s left out chemistry. If his equations were the whole story for carbon, there could be no life.

(Mike, I hope you will correct me if I have misunderstood Sewell’s argument).
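A minimal way to see what “leaving out chemistry” means, assuming (my reading, not checked against his paper) that each X-entropy starts from a plain diffusion equation for a single concentration $U$ with diffusion coefficient $D$:

```latex
\frac{\partial U_A}{\partial t} = D_A \nabla^2 U_A \qquad \text{(diffusion only)}
```

Adding even a toy mass-action reaction $A + B \to AB$ with rate constant $k$ (a hypothetical stand-in for carbon ending up in CO₂, methane, or carbohydrates) couples the species together:

```latex
\begin{aligned}
\frac{\partial U_A}{\partial t}    &= D_A \nabla^2 U_A - k\,U_A U_B,\\
\frac{\partial U_B}{\partial t}    &= D_B \nabla^2 U_B - k\,U_A U_B,\\
\frac{\partial U_{AB}}{\partial t} &= D_{AB} \nabla^2 U_{AB} + k\,U_A U_B.
\end{aligned}
```

Once $k \neq 0$, what happens to $A$ depends on where $B$ is and on the product $AB$, none of which the single-species diffusion equation can express.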

My question is, will Gregory ever stop talking about his desire to talk about something interesting, and actually talk about something interesting? His own threads have been snooze-fests — promising great things, but ending up saying nothing.

Anyone going to take a punt on what the algorithms were that produced my distributions?

Updated with yet another distribution of runs, again with a mean of 50% heads. But this time, extreme proportions are more likely than even proportions.

But not because the algorithm is any more or less “intelligently designed” than the coin toss. It’s just yet another kind of stochastic process we see in nature, including non-living nature, daily.
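One possible process with that shape, offered as a hypothetical sketch and not necessarily the algorithm behind the plots: a “sticky” coin whose first toss is fair and whose every later toss repeats the previous face with probability `p_stay`. By symmetry the mean stays at 50% heads, but with `p_stay` close to 1 most 500-toss runs come out almost all heads or almost all tails. The names `sticky_run`, `simulate`, `shares` and the value 0.999 are my assumptions; setting `p_stay=0.5` recovers the ordinary fair coin, fairly tossed.

```python
import random

def sticky_run(rng, n=500, p_stay=0.999):
    """Proportion of heads in n tosses of a hypothetical 'sticky' coin.

    The first toss is fair; each later toss repeats the previous face with
    probability p_stay and switches otherwise. p_stay=0.5 is an ordinary fair coin.
    """
    heads_up = rng.random() < 0.5
    heads = 0
    for _ in range(n):
        heads += int(heads_up)
        if rng.random() >= p_stay:   # switch faces with probability 1 - p_stay
            heads_up = not heads_up
    return heads / n

def simulate(runs=2_000, seed=0, **kwargs):
    """Proportions of heads from `runs` independent sticky-coin runs."""
    rng = random.Random(seed)
    return [sticky_run(rng, **kwargs) for _ in range(runs)]

def shares(props):
    """(share of runs below 10% or above 90% heads, share between 40% and 60% heads)."""
    extreme = sum(p < 0.1 or p > 0.9 for p in props) / len(props)
    middle = sum(0.4 <= p <= 0.6 for p in props) / len(props)
    return extreme, middle

props = simulate()
print("mean proportion of heads:", sum(props) / len(props))
print("extreme vs middle shares:", shares(props))
```

Nothing here is “designed”: it is just persistence, the kind of correlation between successive events that shows up all over non-living nature.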

It is more than that.

Do go on

You have it right.

Sewell can’t even get units right. Carbon concentration – or any other atom or molecular concentration, for that matter – will have units of reciprocal volume.

Entropy, if expressed in traditional units, will have units of energy divided by temperature. If one chooses to express temperature in units of energy, then entropy is dimensionless.

So Sewell’s “X-entropies” are meaningless. And, as you rightly point out, nothing in his “calculation” allows for things like chemical potentials, chemical reactions, or atomic and molecular interactions of any kind.

Students in high school physics and chemistry classes, and in the introductory courses in science and engineering in colleges and universities, are taught to check units.
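That unit check can be mechanised. A minimal sketch using only the dimensions stated above: concentration as reciprocal volume, entropy as energy over temperature, or dimensionless when temperature is measured in energy units. The helpers `dim` and `divide` are hypothetical, not from any units library.

```python
# Hypothetical dimension bookkeeping: each quantity is a dict of exponents over
# base dimensions M (mass), L (length), T (time) and Theta (temperature).

def dim(**exponents):
    """Build a dimension, dropping zero exponents so equal dimensions compare equal."""
    return {k: v for k, v in exponents.items() if v != 0}

def divide(a, b):
    """Dimension of a/b: subtract b's exponents from a's."""
    out = dict(a)
    for k, v in b.items():
        out[k] = out.get(k, 0) - v
    return {k: v for k, v in out.items() if v != 0}

CONCENTRATION = dim(L=-3)                  # number of particles per unit volume
ENERGY = dim(M=1, L=2, T=-2)               # joules
TEMPERATURE = dim(Theta=1)                 # kelvin

ENTROPY = divide(ENERGY, TEMPERATURE)      # J/K in traditional units
ENTROPY_NATURAL = divide(ENERGY, ENERGY)   # temperature in energy units: dimensionless

print("concentration matches entropy:", CONCENTRATION == ENTROPY)
print("concentration matches dimensionless entropy:", CONCENTRATION == ENTROPY_NATURAL)
```

Both comparisons come out False: whichever convention you pick for entropy, a concentration is not one.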

Sewell’s mistakes are so egregious that I am surprised that none of the engineers or physicists he claims he consulted corrected him. I have my doubts that Sewell consulted anyone; or if he did, they “politely” kept their distance from him.

Moved some banter to guano. Gregory is more than welcome to post an OP on any topic he finds interesting. But this one is about probability distributions.

Incidentally, Sewell and other ID/creationists think “concentrations” drive molecular motion. The misconception is similar to “chance” being a cause of something.

These repeated misconceptions are also clear indicators of how ID/creationists think about the behaviors of atoms and molecules. The notion of the inertness of atoms and molecules is always in the back of their minds when they automatically jump to things like coin flips to calculate chemical outcomes.

Exactly.

Re the “chance” thing – I think the reason Barry is in a muddle is that on the one hand he thinks that evolutionary theory is “chance did it”, and that it’s cobblers, because chance can’t do anything, but he still thinks you can reject a chance hypothesis.

The idea that the evolutionary theory invokes chance as a cause is so ingrained in ID thinking that at least some IDists can’t seem to get their heads round the idea that rejecting “chance” doesn’t defeat Darwinism!

But the reason you can’t reject “chance” as a null is exactly the same as the reason why Dembski isn’t rejecting evolutionary theory when he rejects “chance”, and is also the reason that IDists totally misunderstand evolutionary theory, and rightly see that what they think it is makes no sense.

It doesn’t.

Lizzie, I agree with your post; it explains what we can know from a scientific approach. But if you said this:

“The part I DID know was the probability distribution – because I specified the process. When a coin is tossed, it does not fall Heads because of “chance”, but because the toss in question was one that led, by a process of Newtonian mechanics, to the outcome “Heads”. What was “chance” about it is that the tosser didn’t know which, of all possible toss-types, she’d picked. So the selection process was blind, just as mine is in all the above examples.”

then you have to admit that Gould was wrong when he said this:

“Humans are not the end result of predictable evolutionary progress, but rather a fortuitous cosmic afterthought, a tiny little twig on the enormously arborescent bush of life, which, if replanted from seed, would almost surely not grow this twig again, or perhaps any twig with any property that we would care to call consciousness.”

And many Darwinists believe what Gould said. If I had time, I could quote you saying similar things here.

Because the entire Universe was once something incredibly small and currently not understandable, in a very real sense everything is in the same place; the center of the Universe.

No, Blas, you’ve got it exactly backwards. It’s precisely because we can’t predict the outcome of any iteration of a stochastic process (coin flips or meiosis) that we would not expect an identical run of results if we could go back and “rerun the tape”, as Gould says.

You can test this for yourself. Flip a fair coin 100 times and record the sequence. Now flip the same coin another 100 times and record that sequence, too. Are the two sequences identical? Of course not. And yet, each flip was directly controlled by known physical forces. Chance is not a mechanism. Chance did not cause any of the flips to wobble towards one side or the other.
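The same point in a toy model where every flip is fully determined by physics. In this hypothetical sketch (an idealised spinning coin with no bounce or air drag; the spin-rate and flight-time ranges are my assumptions, not measurements), the outcome depends only on the initial spin rate and flight time. The only “chance” is that a seeded generator picks those initial conditions blindly, much like the tosser in the quoted passage above not knowing which toss-type she had picked.

```python
import math
import random

def toss(omega, t):
    """Idealised coin: starts heads-up and spins at omega rad/s for t seconds.

    Every half-turn (pi radians) swaps which face is up, so the outcome is
    completely determined by (omega, t); nothing in here is random.
    """
    half_turns = math.floor(omega * t / math.pi)
    return "H" if half_turns % 2 == 0 else "T"

def flip_sequence(seed, n=100):
    """n deterministic tosses whose initial conditions are picked 'blindly' by a seeded generator."""
    rng = random.Random(seed)
    return "".join(
        toss(rng.uniform(180.0, 260.0), rng.uniform(0.4, 0.6)) for _ in range(n)
    )

first = flip_sequence(seed=1)
second = flip_sequence(seed=2)
print("two runs of 100 identical?", first == second)
print("same initial conditions replayed identical?", flip_sequence(seed=1) == first)
```

Replaying the same initial conditions reproduces the sequence exactly; a different blind pick gives a different sequence. For two independent fair sequences of 100 flips, the chance of a match is 0.5**100, about 8e-31.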

Now perform a similar test with your gametes. [You can do this as a thought experiment if the real experiment would be too messy or too expensive.] Get just 1 of your gametes. Get the DNA sequences, all 3 billion pairs. Now get another sample. Are the sequences identical? No, of course not. And yet, the production of your gametes was directly controlled by known physical forces – biochemistry, that is, molecules interacting because of electromagnetic force. Chance is not a mechanism. Chance did not cause any of the DNA bases to slip out of line and be replaced by another.

We don’t expect that you can reproduce yourself with exact fidelity. If we “rerun the tape” of life, we don’t expect to get the exact same two children as a result, any more than we expect to get the exact same two sequences of coin flips. How wonderful that, when your parents ran their tape of life, the known physical forces made the particular gametes that resulted in you specifically!

Gould extrapolates those quite simple tests to the entire record of life. We have no reason to expect if we started over again with, say, the primitive mammals following the Chicxulub impact, that imperfect DNA copying would be imperfect in exactly the same way twice, leading predictably to something indistinguishable from our own form of humanity. Something like tree-climbing primates with grasping hands? Well, probably; it’s an obvious ecological niche waiting to be exploited as soon as the mutations arose which could then be selected for it. Communicative social behavior? Well, probably; it’s another obvious ecological niche. Proto humans who look like us more than like gorillas? Hmm, maybe not.

OK thanks 🙂