My problem with the IDists’ 500 coins question (if you saw 500 coins lying heads up, would you reject the hypothesis that they were fair coins, and had been fairly tossed?) is not that there is anything wrong with concluding that they were not. Indeed, faced with just 50 coins lying heads up, I’d reject that hypothesis with a great deal of confidence.

It’s the inference drawn from that answer of mine: that if, as a “Darwinist”, I am prepared to accept that a pattern can be indicative of something other than “chance” (exemplified by a fairly tossed fair coin), then I must logically also sign on to the idea that an Intelligent Agent (as the alternative to “Chance”) must be inferrable from such a pattern.

This, I suggest, is profoundly fallacious.

First of all, it assumes that “Chance” is the “null hypothesis” here. It isn’t. Sure, the null hypothesis (fair coins, fairly tossed) is rejected, and, sure, the hypothesized process (fair coins, fairly tossed) is a stochastic process – in other words, the result of any one toss is unknowable beforehand (by definition, otherwise it wouldn’t be “fair”), and both the outcome sequence of 500 tosses and the proportion of heads in the outcome sequence are also unknown. What we do know, however, because of the properties of the fair-coin-fairly-tossed process, is the probability distribution, not only of the proportions of heads that the outcome sequence will have, but also of the distribution of runs-of-heads (or tails, but to keep things simple, I’ll stick with heads).

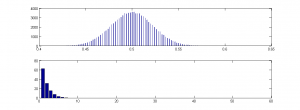

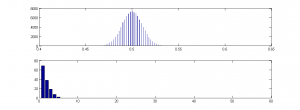

And in fact, I simulated a series of 100,000 such runs (I didn’t go up to the canonical 2^500 runs, for obvious reasons), using MatLab, and here is the outcome:

As you can see from the top plot, the distribution is a beautiful bell curve, and in none of the 100,000 runs do I get anything even as low as 40% Heads or as high as 60% Heads.


Moreover, I also plotted the average length of runs-of-heads – the average is just over 2.5, and the maximum is less than 10, and the frequency distribution is a lovely descending curve (lower plot).
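As a rough cross-check on why results near 40% or 60% Heads are so rare, here is a minimal sketch using the normal approximation to the fair-coin null. The function names are illustrative, not taken from the original script, and the approximation is crude this far into the tails, so treat the p values as orders of magnitude only.

```python
import math

N_TOSSES = 500  # tosses per sequence, as in the post


def z_score(heads, n=N_TOSSES):
    """Standard deviations between the observed Heads count and n/2 under the fair-coin null."""
    return (heads - n / 2) / math.sqrt(n * 0.25)


def two_sided_p(z):
    """Normal-approximation probability of a deviation at least this large in either direction."""
    return math.erfc(abs(z) / math.sqrt(2))


if __name__ == "__main__":
    for heads in (200, 225, 250, 300):
        z = z_score(heads)
        print(f"{heads} heads ({heads / N_TOSSES:.0%}): z = {z:+.2f}, p ~ {two_sided_p(z):.2e}")
```

Under this null, 200 Heads out of 500 (40%) sits roughly four and a half standard deviations below the expected 250.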

If therefore, I were to be shown a sequence of 500 Heads and Tails, in which the proportion of Heads was:

- less than, say 40%, OR

- greater than, say 60%, OR

- the average length of runs-of-heads was a lot more than 2.5, OR

- the distribution of the proportions was not a nice bell curve, OR

- the distribution of the lengths of runs-of-heads was not a nice descending (roughly geometric) curve like the one in the lower plot,

I would also reject the null hypothesis that the process that generated the sequence was “fair coins, fairly tossed”. For example:

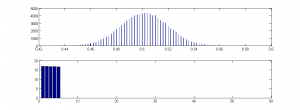

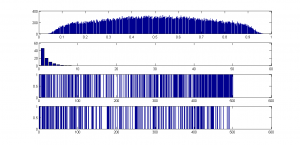

This was another simulation. As you can see, the bell curve is pretty well identical to the first, and the proportions of heads are just as we’d expect from fair coins, fairly tossed – but can we conclude it was the result of “fair coins, fairly tossed”? Well, no. Because look at the lower plot – the mean length of runs of heads is 2.5, as before, but the distribution is very odd. There are no runs of heads longer than 5, and all lengths are of pretty well equal frequency. Here is one of these runs, where 1 stands for Heads and 0 stands for tails:


1 0 1 1 1 0 0 1 1 1 1 1 0 0 0 0 0 1 1 1 1 0 0 0 1 1 1 1 0 0 0 0 1 1 1 1 1 0 0 0 0 1 1 1 1 1 0 1 1 1 1 0 0 0 0 1 1 1 1 0 0 1 1 1 1 1 0 0 0 1 1 1 1 0 0 0 1 1 1 1 0 0 0 1 0 0 0 0 0 1 1 1 1 1 0 1 1 1 1 0 0 0 1 1 1 1 0 0 0 0 0 1 1 0 1 1 1 1 1 0 0 0 0 1 0 1 1 0 0 0 0 0 1 1 0 0 0 0 1 1 1 1 1 0 0 1 1 1 1 0 0 0 1 1 0 0 0 1 1 0 0 1 1 0 1 1 1 1 1 0 1 1 0 0 0 1 1 1 1 0 1 1 1 1 1 0 0 0 0 0 1 0 1 0 0 1 1 1 1 0 1 1 1 1 0 0 0 0 1 1 0 0 0 0 0 1 1 0 0 0 1 0 1 1 1 1 0 0 0 0 0 1 1 1 0 0 0 0 1 1 1 1 0 0 0 0 1 1 1 1 0 1 1 1 0 0 0 0 1 1 1 1 0 1 1 1 1 1 0 0 0 0 0 1 1 1 0 0 0 0 1 1 0 0 0 0 1 1 0 1 0 0 0 1 1 1 0 0 0 0 0 1 0 0 0 0 1 1 1 1 0 0 0 0 1 1 1 1 0 1 1 1 1 1 0 1 1 1 1 0 0 0 0 0 1 1 1 1 0 0 0 0 1 1 1 0 0 0 1 1 1 0 0 0 0 1 0 0 0 1 1 1 1 1 0 0 0 0 0 1 1 0 0 0 1 1 0 0 0 0 1 1 1 1 1 0 1 0 0 1 1 0 0 0 1 1 0 0 0 0 1 1 0 0 0 1 1 1 0 0 1 1 1 1 0 0 0 0 0 1 1 0 0 1 1 1 1 1 0 0 0 0 1 1 1 0 1 1 1 0 0 0 0 1 1 1 1 0 0 0 0 1 1 0 0 0 1 1 1 0 0 0 0 1 1 1 1 0 0 1 1 1 0 0 1 0 0 0 0 1 1 1 0 0 0 0 0 1 1 1 1 0 0 1 1 1 1 0 0 0

Would you detect, on looking at it, that it was not the result of “fair coins fairly tossed”? I’d say at first glance, it all looks pretty reasonable. Nor does it conform to any fancy number, like pi in binary. I defy anyone to find a pattern in that run. The reason I so defy you is that it was actually generated by a random process. I had no idea what the sequence was going to be before it was generated, and I’d generated another 99,999 of them before the loop finished. It is the result of a stochastic process, just as the first set were, but this time, the process was different. For this series, instead of randomly choosing the next outcome from an equiprobable “Heads” or “Tails”, I randomly selected the length of the next run of each toss-type from the values 1 to 5, with an equal probability of each length. So I might get 3 Heads, 2 Tails, 5 Heads, 1 Tail, etc. This means that I got far more runs of 5 Heads than I did the first time, but far fewer (infinitely fewer in fact!) runs of 6 Heads! So ironically, the lack of very long runs of Heads is the very clue that tells you that this series is not the result of the process “fair coins, fairly tossed”.

But it IS a “chance” process, in the sense that no intelligent agent is doing the selecting, although an intelligent agent is designing the process itself – but then that is also true of the coin toss.

Now, how about this one?

Prizes for spotting the stochastic process that generated the series!

The serious point here is that by rejecting the null of a specific stochastic process (fair coins, fairly tossed) we are NOT rejecting “chance” (because there are a vast number of possible stochastic processes). “Chance” is not the null; “fair coins, fairly tossed” is.

However, the second fallacy in the “500 coins” story is that not only are we not rejecting “chance” when we reject “fair coins, fairly tossed”, but nor are we rejecting the only alternative to “Intelligently designed”. We are simply rejecting one specific stochastic process. Many natural processes are stochastic, and the outcomes of some have bell-curve probability distributions, and of others Poisson distributions, but still others, neither. For example, many natural stochastic processes are homeostatic – the more extreme some parameter becomes, the more likely is the next state to be closer to the mean.

The third fallacy is that there is something magical about “500 bits”. There isn’t. Sure, if a p value for data under some null is less than 2^-500 we can reject that null, but if physicists are happy with 5 sigma, so am I, and 5 sigma is only about 2^-22 IIRC (it’s too small for my computer to calculate).
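For anyone who wants to check the order of magnitude, here is a minimal sketch that converts a sigma threshold into “bits”. It assumes the one-sided tail of a standard normal distribution, which is the usual particle-physics convention; a two-sided test would double the p value and shave one bit off the exponent. The function name is illustrative.

```python
import math


def upper_tail(sigmas):
    """One-sided standard normal tail probability P(Z > sigmas)."""
    return 0.5 * math.erfc(sigmas / math.sqrt(2))


if __name__ == "__main__":
    p = upper_tail(5.0)
    bits = -math.log2(p)  # how many fair coin tosses landing all Heads would be equally improbable
    print(f"5 sigma, one-sided: p = {p:.3e}, i.e. about 2^-{bits:.1f}")
    print(f"For comparison, 2^-500 = {2.0 ** -500:.3e}")
```

The gap between the two thresholds is the point: a null can be rejected at conventional scientific standards with a tiny fraction of 500 bits.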

And fourthly, the 500 bits is a phantom anyway. Seth Lloyd computed it as the information capacity of the observable universe, which isn’t the same as the number of possible times you can toss a coin, and in any case, why limit what can happen to the “observable universe”? Do IDers really think that we just happen to be at the dead centre of all that exists? Are they covert geocentrists?

Lastly, I repeat: chance is not a cause. Sure we can use the word informally as in “it was just one of those chance things….” or even “we retained the null, and so can attribute the observed apparent effects to chance…” but only informally, and in those circumstances, “chance” is a stand-in for “things we could not know and did not plan”. If we actually want to falsify some null hypothesis, we need to be far more specific – if we are proposing some stochastic process, we need to put specific parameters on that process, and even then, chance is not the bit that is doing the causing – chance is the part we don’t know, just as I didn’t know when I ran my MatLab script what the outcome of any run would be. The part I DID know was the probability distribution – because I specified the process. When a coin is tossed, it does not fall Heads because of “chance”, but because the toss in question was one that led, by a process of Newtonian mechanics, to the outcome “Heads”. What was “chance” about it is that the tosser didn’t know which, of all possible toss-types, she’d picked. So the selection process was blind, just as mine is in all the above examples.

In other words it was non-intentional. That doesn’t mean it was not designed by an intelligent agent, but nor does it mean that it was.

And if I now choose one of those “chance” coin-toss sequences my script generated, and copy-paste it below, then it isn’t a “chance” sequence any more, is it? Not unless Microsoft has messed up (Heads=”true”, Tails=”false”):

true false true false false false false true true false true true false true true true false true false true true true true true false true false false false true true true true true true true true true false false false true true false true true false false false true false false true true false false false true false true false true true true true true true false false true false true true true true true true false true true true false true false false false false false false false false false false false false false false true false false true false true true true false true true true false true true false false true true true true true false true false true false false true true false true true true true true true true false false true false false true false false true true false true true true true false false true false false false false true false false true true false false true false true true false true false true true true true true true true true true true true true true false true false false true false false false true false false true true true false false true true true false true true true false false false true false true false false true false true false true true false true false true false true false false true true true false true true false false false false true false false true false true true true true true false true true true false false false true false true false true true true false false false false false false false true true false true false true false true true false false false true false true true false true false true true true true false true false false false false true false true true true true false false true false true true true true false true true false false true false true false true true true true true false false false true true true false false false true true true false true false true true true true true true true false false true false true false false true true true false true false true true false false true 
true true false true true false false false true false false false false true false false true true true true false false true false false true true true true false true false false true false true true false false true true false false true true false true false true true false false false false false false false true false true true false false false true true true false false false true false true false false false true true false true false true true true true false true false false false true false true true true false false false false true false true true false true true true false true true false true false false true false true false true true false false true false true false true false false true true false false

I specified it. But you can’t tell that by looking. You have to ask me.

ETA: if you double click on the images you get a clear version.

ETA2: Here’s another one – any guesses as to the process (again entirely stochastic)? Would you reject the null of “fair coins, fairly tossed”?

0 1 0 1 0 1 0 1 0 0 1 1 1 1 1 0 1 0 1 1 1 1 0 0 0 1 0 1 1 1 0 1 0 0 0 1 1 0 0 0 1 1 0 0 0 1 0 1 1 0 1 0 0 1 1 0 1 1 0 1 1 0 1 0 1 0 0 1 1 0 0 1 0 0 1 0 0 0 1 1 0 1 1 0 0 0 1 1 1 0 1 0 0 0 1 0 1 1 0 1 1 0 1 1 0 0 0 1 1 1 1 0 0 0 0 0 1 1 0 0 1 0 1 1 1 1 0 0 1 1 0 1 1 0 0 1 0 1 1 1 1 0 0 0 0 1 1 1 1 0 0 0 1 1 1 1 0 1 0 0 0 1 0 1 0 0 1 1 1 0 0 0 0 1 1 0 0 1 0 1 0 1 1 0 0 1 1 1 0 1 0 0 0 1 1 0 1 0 1 0 0 1 0 1 1 1 0 0 1 0 1 0 1 0 0 1 0 1 1 0 0 0 0 0 0 1 1 1 0 0 0 0 1 1 1 1 1 0 0 0 1 1 1 0 1 0 0 0 0 1 1 1 0 1 0 0 1 0 1 1 0 0 0 1 1 0 0 1 0 0 0 1 1 1 0 0 1 0 0 1 1 1 1 0 0 0 0 1 1 0 1 0 0 0 1 0 1 1 1 0 0 1 0 0 0 1 0 1 1 0 1 0 1 1 0 1 1 1 0 1 0 1 0 0 0 0 1 1 1 1 1 0 1 0 1 1 0 0 1 1 0 1 1 1 0 1 1 0 0 1 1 0 1 0 1 0 1 0 0 0 1 0 1 0 1 1 1 1 0 0 1 0 1 0 1 1 1 0 1 0 0 1 0 0 1 1 1 0 0 1 1 0 0 1 0 0 0 1 0 0 1 0 1 0 1 1 0 0 0 0 1 1 0 0 0 1 0 1 1 1 0 1 0 1 0 0 1 1 0 1 1 0 0 0 1 0 1 1 0 0 1 1 0 1 0 0 1 0 0 1 1 1 0 1 0 0 1 0 1 0 1 0 1 1 0 1 0 1 1 0 0 1 1 1 0 1 0 0 0 0 1 0 1 1 1 1 0 1 0 0 0 1 1 1 0 0 1 1 0 1

Barry? Sal? William?

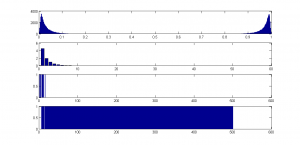

ETA3: And here’s another version:

The top plot is the distribution of proportions of Heads.

The second plot is the distribution of runs of Heads

The bottom two plots represent two runs; blue bars represent Heads.

What is the algorithm? Again, it’s completely stochastic.

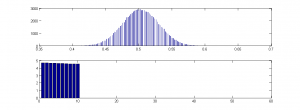

And one final one:

which I think is pretty awesome! Check out that bimodality!


Homochirality here we come!!!

Yes, because the thoughts in your brain are transmitted whole from a platonic realm of pure thought.

Some of us have to do with pattern recognition systems built from neural networks in silly old organic matter.

This is boring. I wonder if we can get phoodoo to come back.

They’re perfectly good for the argument I’m actually making, not for the one you’d prefer I make. The hypothetical challenges I present are easily answered because they are absolutely trivial. My argument is not about “forming a strategy for design detection” because my examples don’t require any strategy for design detection – they are obviously the product of (categorical) design.

IOW, if you found War and Peace written out in shaped molecules in the DNA of an ancient ant extracted from amber, you could only “probably” consider it the result of (categorical) ID?

Yeah. The physical properties of the chemicals make it plausibly likely that the molecules would spell out “Hi, Liz! Whatcha lookin’ at? Maybe some molecules that can only be explained via ID?”

And those properties would also just make it improbable (not implausible) to find War and Peace spelled out verbatim in some DNA. Hmm.

Bullshit.

You see, Liz, your “argument” here hangs on my admission that I am not educated in the pertinent fields of molecular forces. So you say that the nature of those bindings, etc. could be such that they would tend to spell out that phrase, and if so, this would be unknown to me seeing as I’m not educated in those fields.

But, let’s think this through a bit. Seeing as things like oddly shaped rocks on Mars or what looks like a Virgin Mary burned into toast gets instant, widespread news & media coverage, to the point that just about everyone knows about it, is it plausible that molecular research has uncovered a tendency in any DNA for the molecules to spell out coherent sentences in English? Specifically, the one I wrote for the example? I think that even if a very tiny, understandable phrase in English was spelled out in near-perfect molecular formation, I probably would have heard about it. Surely, such information would be all over the internet, at the very least propagated by creationists as proof of ID.

But, all I can find is one well-known hoax about a message from god coded in the DNA (not physically spelled out in English). No pictures of English words. Nothing on creationist sites to even investigate. No websites devoted to the English messages observed in the DNA, nor any rebuttals about how it is the lawful tendency of molecular interactions that makes them form these apparent words and phrases. Nada.

Plus, you have presented no argument, no papers, no photos, no links – again, nada – to support your ~~bluff~~ contention that such a find could be because the natural molecular attractions and bindings make such a configuration likely.

I don’t have to be educated in a particular field in order to know – at least in some instances – when someone is bluffing. That may get this post kicked to guano, but then you asked how it is that I call a technical argument absurd – but, the fact about this particular case is that you presented no technical argument, only the facade of one.

That facade is absurd because the notion that such molecular bindings and affinities naturally make it plausible that this sentence in particular is physically spelled out in English somewhere where you – in particular, you, who have a relationship to the content of the phrase – would find it, is patently ridiculous. If such a thing had ever occurred, or occurred with any regularity that would make it likely (just talking about English phrases being spelled out by DNA), the whole internet would be screaming about it non-stop.

Put up or shut up, Liz. Provide me the evidence that we can expect to find naturally-occurring sentences in English physically spelled out in DNA, and direct me to the peer-reviewed papers that describe this peculiar affinity.

Otherwise you’re bluffing.

*points*. *laughs*

Poor old mind-powers.

Shark. Jumped. Wow, I’ve really overestimated you William. I actually, well, no, let’s not go there. I’m embarrassed now.

Heh, I’m done.

Oh Christ, I’d not got to that when I posted me previous comment.

Maybe others would like to respond to this question, too. I’d agree, so long as “some kind of intelligent agency” does not include imaginary beings with assumed abilities that transcend the laws of physics.

I’d certainly reserve judgement on the possibility of hallucination or delusion until observations were confirmed by shared experience. A single human observer can be easily fooled as illusionists such as Derren Brown demonstrate.

The results of some natural phenomena can fool people into thinking some agency other than natural processes is at work (Giant’s Causeway, tessellated pavements, sailing rocks, etc.).

Yes please.

I accept that there theoretically could be, not that there are. For instance, Shakespeare’s sonnets spelled out in some star cluster revealed by Hubble.

However, I’d still put mass hallucinations as a pretty high prior.

This falls out of Bayes’ Rule btw.

P(Intelligent Agent|Comprehensible message apparently in space)=P(Comprehensible message apparently in space|Intelligent Agent)*P(Intelligent Agent)

all divided by:

P(Comprehensible message apparently in space|Intelligent Agent)*P(Intelligent Agent) + P(Comprehensible message apparently in space|Not Intelligent Agent)*P(Not Intelligent Agent).

I’d put my prior for Intelligent Agent moderate (I think that we are unlikely to be the only Intelligent agents in the universe), my likelihood of such an agent placing a Comprehensible Message apparently in space as extremely low, and my likelihood for a Comprehensible message apparently in space emerging from something other than an intelligent agent (other than human wishful thinking) as low, but not negligible.

But the value of those priors would depend quite strongly on the details of the scenario, and I can conceive of a scenario where I would say, without much doubt: clearly this is a message from an intelligent agent.
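To make the dependence on the scenario concrete, here is a minimal sketch of the Bayes’ Rule calculation above. Every number in it is a hypothetical placeholder chosen only to show how the posterior moves; none of them is an estimate made anywhere in this thread.

```python
def posterior(prior_agent, p_message_given_agent, p_message_given_not_agent):
    """P(Intelligent Agent | message) from the prior and the two likelihoods."""
    numerator = p_message_given_agent * prior_agent
    denominator = numerator + p_message_given_not_agent * (1.0 - prior_agent)
    return numerator / denominator


if __name__ == "__main__":
    # Hypothetical scenario A: a non-agent explanation (e.g. wishful pattern-matching)
    # is a hundred times likelier than an agent bothering to leave the message.
    print(posterior(prior_agent=0.5, p_message_given_agent=1e-6, p_message_given_not_agent=1e-4))

    # Hypothetical scenario B: the details make a non-agent origin vanishingly unlikely.
    print(posterior(prior_agent=0.5, p_message_given_agent=1e-6, p_message_given_not_agent=1e-12))
```

With the same moderate prior and the same low likelihood under the agent hypothesis, the conclusion flips depending entirely on how improbable the message is without an agent.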

As an example, I think the Medjugorje apparitions, though apparently witnessed by many, and probably having CSI, were nonetheless not the work of anything other than human intelligence.

I’m referring to this:

amongst which you included

I don’t think you understand null hypothesis testing, and I don’t think you understand that it is precisely this null-rejecting process that Dembski himself advocates. Not that you couldn’t do it in your head at a glance if all relevant nulls were sufficiently obviously false, but in practice, as an empirical scientist, I can tell you that confirmation bias is extraordinarily strong, which is why we do tedious things like rigorous statistical testing against some rigorously defined null.

I can provide no evidence (or reasoning) to expect this, unless a genetic engineer put them there.

I’m really not sure what point you are trying to make. I can only assume you must think I am making a different argument to the one I am actually trying to put to you.

Clearly, if there is a vanishingly improbable chance of a striking sequence in English appearing other than by an English speaking agent putting it there, you reject all the alternatives, although you might cursorily consider them first, not least being the hypothesis that you are hallucinating.

What happens when your admitted ignorance causes you to recognize design while trained specialists in the area conclude no evidence of design?

How do you protect against false positives caused by that ignorance?

Thanks for the straightforward answer, AF.

(I’m not being sarcastic.)

In my opinion this is the whole problem with Lizzie’s argument mode of operating. She will attempt to reconstruct any meaning by confusing it so badly with meaningless technical terms, that you would spend the whole day unwinding her nonsense terms. Like throwing in “non linear chaos” because she will say well it is a well accepted term in math, as if it somehow has meaning to the conversation. Now you are forced to deal with this completely irrelevant concept, when explaining such an obvious point like that Darwinism is FOUNDED on chaos causing useful innovations. Which everyone with a grain of honesty in their body knows is exactly what neo-darwinian theory says. It’s so undeniable that it is pointless to even argue it.

I think that is why her posts are always so long: she can’t make a clear, concise point, because it’s much better to confuse with irrelevant terms. If you try to pin her down to a simple point, you just get more reams of inapplicable nonsense.

And then claim, ‘Well, you just can’t understand it, its technical.’

Hogwash.

phoodoo,

“She will attempt to reconstruct any meaning by confusing it so badly with meaningless technical terms…”

“And then claim, ‘Well, you just can’t understand it, its technical.”

Or consider the alternative hypothesis: that you don’t understand it. You’ve not done well with ‘understanding’ in other threads…

OK, we see. Just like WJM you don’t have the technical expertise/education/knowledge necessary to be persuaded in any way by technical/mathematical/statistical/scientific arguments either. At least he had the sack to admit it.

Ha ha, yea right. That’s why this thread is over a few hundred posts and you still can’t even work out what “fair” means. Sometimes it means the way a coin is tossed, sometimes it means not having intent, sometimes it means not having knowledge, sometimes it means equiprobable, sometimes it means a coin, sometimes, it doesn’t….

And this for ONE word!

The whole presentation of this thread is ridiculous. Lizzie has all these graphs, and charts, to show how look, I can get this outcome and this outcome, see the blue, all is chance…..

And it looks good and all, I mean you can almost baffle someone with its brilliance, right? That is, if that someone ignores the fact that all the graphs are showing is that if you manipulate the “chance” out of the equations, you get different results. Gee what a surprise! In some she manipulates it a little, in some a lot, so it’s just different degrees of chance. Big fricking deal.

But if you confuse people enough, it looks great!

Sorry phoodoo, we dumbed down basic probability terminology for you as far as we could without losing all meaning. There’s nothing more we can do if you still don’t understand it.

You seem to be the only one confused by the concepts.

Can you explain what you mean by “if you manipulate the ‘chance’ out of the equations, you get different results”? Aren’t all the examples Lizzie posted completely stochastic processes?

If I found just one coin on a planet I’d conclude it was made by intentional process. A disk, enriched in certain metallic elements, two flattish faces with some design in relief? Oh yes, I know a coin when I see one. An interesting molecule, however …

No problem, William. I recall we had a discussion on crop circles a while ago.

That’s why I thanked you for the straightforward answer – this time.

Hi, Phoodoo:

Let me explain those graphs a little more clearly.

Every single one was generated by a computer script that used a random number generator to select whether heads or tails came next. So every single run was “due to chance”. I never, at any point, could predict whether a head or a tail was coming up next, nor did I ever, at any point, tell the computer which to produce.

In other words, the outcome of every toss was not decided by an “intelligent designer” (me) or even an intelligent computer, but by the computer’s random number generator.

However, the way those random effects were applied had different patterns in each case, just as in nature, including non-living nature.

In the first, the computer simply produced “heads” if the random number generator, which produced a number between zero and 1, was more than .5 and “tails” if it was less than .5. As a result, the distribution of runs of 500 outcomes is exactly like the distribution from actual coin tosses, where the probability of Heads on any given toss is 50:50.
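A minimal Python sketch of that first process, for anyone who wants to try it. The original was a MatLab script that is not reproduced here, so the function names, the seed and the number of runs are all assumptions. The summary figures it prints depend on how runs are counted and need not match the plots exactly.

```python
import random
from itertools import groupby


def fair_sequence(n, rng):
    """n independent tosses: 1 (Heads) if the random draw exceeds 0.5, else 0 (Tails)."""
    return [1 if rng.random() > 0.5 else 0 for _ in range(n)]


def head_run_lengths(seq):
    """Length of every maximal run of consecutive Heads in the sequence."""
    return [len(list(group)) for value, group in groupby(seq) if value == 1]


def summarise(n_runs=1000, n_tosses=500, seed=1):
    """Return (lowest proportion, highest proportion, mean Heads run, longest Heads run)."""
    rng = random.Random(seed)
    proportions, runs = [], []
    for _ in range(n_runs):
        seq = fair_sequence(n_tosses, rng)
        proportions.append(sum(seq) / n_tosses)
        runs.extend(head_run_lengths(seq))
    return min(proportions), max(proportions), sum(runs) / len(runs), max(runs)


if __name__ == "__main__":
    lowest, highest, mean_run, longest = summarise()
    print(f"proportion of Heads: min {lowest:.3f}, max {highest:.3f}")
    print(f"runs of Heads: mean length {mean_run:.2f}, longest {longest}")
```

The generator never looks at earlier tosses, which is what makes each toss independent of the rest.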

In the next, I did something a little different, although just as “random”. I got the computer to randomly select a number from 1 to 5, just like throwing a five-sided die (perhaps I should have set it to 6, as 5-sided dice are rare!). It would then output that number of Heads. Having done so, it would then select a new number from 1 to 5, and output that number of Tails. And then back to Heads again. So if the computer selected, say, 5,2,1,4,4,3, it would output: HHHHHTTHTTTTHHHHTTT.

This random process on average generates the same distribution of Heads:Tails on each run as the first (the “coin-tossing” one), so just by looking at the total number of Heads and Tails, you wouldn’t be able to tell that the coins hadn’t been conventionally tossed. But you can tell by looking at the distribution of consecutive like-tosses, because instead of having a nice, steadily descending (roughly geometric) distribution, the distribution is flat to 5 and then drops to zero.
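The “five-sided die” process can be sketched in a few lines of Python. This is an illustration under assumptions rather than the original script: the function names are made up, the sequence is assumed to start with Heads, and the final run is simply cut off at 500 tosses.

```python
import random
from collections import Counter
from itertools import groupby


def block_sequence(n, rng, max_run=5):
    """Alternate runs of Heads (1) and Tails (0), each run length drawn uniformly from 1..max_run."""
    seq, side = [], 1  # assumption: the first run is Heads
    while len(seq) < n:
        seq.extend([side] * rng.randint(1, max_run))
        side = 1 - side  # switch sides for the next run
    return seq[:n]  # assumption: truncate the last run to fit n tosses


def run_length_counts(seq, side=1):
    """How many runs of each length the chosen side produced."""
    return Counter(len(list(group)) for value, group in groupby(seq) if value == side)


if __name__ == "__main__":
    rng = random.Random(2)
    seq = block_sequence(500, rng)
    print("proportion of Heads:", sum(seq) / len(seq))
    print("Heads run lengths:", sorted(run_length_counts(seq).items()))
```

Because a new run always switches sides, no run can exceed `max_run`, which is exactly the flat-then-zero signature that gives the process away.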

But this doesn’t mean that the process was not entirely due to “chance” if you want to call something as due to chance: if I was watching the process in real time, and I saw a single head thrown, I would still have no clue whether the next would be heads or tails. However, the more heads in a row I saw, the more likely the next one would be tails – and after 5 heads, it would become a certainty, once I’d figured out the process. Again, we see this kind of thing in nature all the time – events “clump” – the longer it has been raining, the more likely it is that it will stop within the next 10 minutes (unless you live in Vancouver….)

In the third example, I weighted the “die” slightly, making longer runs of the same side less likely than shorter runs, with a maximum of 10. This makes it even more like “showers of rain”, but with a rather “unnaturally” precipitous cut-off at 10. I could have made it more gradual.

In the next one, I did something more “natural”, and something we do see all the time in both living and non-living nature: I made each toss partially dependent on previous tosses, so that if the random number thrown was less than the average proportion of heads over the previous 20 (I think – I’ve forgotten exactly the parameter I used), it would throw a tail, and if not, it would throw a head. As a result, it tended to be “self-correcting” – the more heads there’d been recently, the more likely a tail would be, and vice versa. In other words, the process is “random” but not “independent” – just as in homeostatic systems, both living and non-living. The system tends to “self-correct” to prevent too many of any one side clumping. To a casual observer, this system might look even “more random” than true coin tossing, because long runs of one side are much rarer, and extreme values of Heads:Tails ratio also much rarer. But an astute observer would reject “fair coins, fairly tossed” as the generative process, precisely because the sequences are “too good” for that process. But there’s nothing “intelligent” about it – it’s exactly the same system as the one that makes Old Faithful so “faithful”, or, for that matter, the one that makes pressures tend to equalise between two communicating air tanks.
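Here is a minimal sketch of that self-correcting rule. The window of 20 is the half-remembered parameter mentioned above; the way the history is seeded before the first toss (alternating Heads and Tails) is purely an assumption, as are the function names.

```python
import random
import statistics
from collections import deque


def homeostatic_sequence(n, rng, window=20):
    """Each toss is Tails with probability equal to the recent proportion of Heads."""
    # Assumption: start from a balanced, alternating history so the first toss is 50:50.
    recent = deque((i % 2 for i in range(window)), maxlen=window)
    seq = []
    for _ in range(n):
        mean_heads = sum(recent) / window
        toss = 0 if rng.random() < mean_heads else 1  # many recent Heads makes Tails likelier
        seq.append(toss)
        recent.append(toss)  # the oldest toss drops out of the window automatically
    return seq


def proportion_spread(n_runs, n_tosses, seed, window=20):
    """Standard deviation of the Heads proportion across many sequences."""
    rng = random.Random(seed)
    props = [sum(homeostatic_sequence(n_tosses, rng, window)) / n_tosses for _ in range(n_runs)]
    return statistics.pstdev(props)


if __name__ == "__main__":
    print("spread of Heads proportions:", round(proportion_spread(500, 500, seed=1), 4))
```

For comparison, independent fair tosses give a standard deviation of about 0.022 in the Heads proportion over 500 tosses, so a noticeably smaller spread is one way of seeing that the sequences are “too good”.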

Finally, the last examples are even more interesting. Like the previous one I described, each coin toss is non-independent of the average over a number of previous tosses. But instead of Heads being increasingly less likely as the average number of previous Heads goes up, it becomes increasingly more likely. So the system is: if [random number] is less than the average number of heads in the last N “throws”, throw Heads, else throw Tails. This means that instead of being “self-correcting”, as in a homeostatic system, the system tends to get “into a rut” – the more heads that turn up, the more likely the system is to produce yet another head. In the first of these two examples, the N was fairly large, and so while it tended to produce far longer runs of any one side than in previous examples, in each run it would eventually “flip” to the other side. The result is an extremely broad distribution of Heads:Tails ratios, with quite extreme values being quite common, and many long runs of either Heads or Tails in each sequence. Again, this is common in both living and non-living nature – when the jet stream gets into one position, we have long runs of similar weather, until it “flips” (“randomly”) and we get a long run of a different kind of weather. It’s a kind of homeostasis, but instead of having one “attractor basin” in the centre (“regression to the mean”) there are “attractors” at the two extremes.

And in the last run, I made this very extreme, so in most runs, the sequence rapidly flipped to either Heads or Tails, and stayed there for the remainder of the run, although whether it ended up as a “Mostly Heads” run, or a “Mostly Tails” run was just as equiprobable as with the “true coin toss” run. And again, we see this in nature all the time – a vortex, once started as a left-hander or a right-hander, will tend to continue in that “rut” and be very hard to change, even though each may be equiprobable at the outset. And this may explain, for instance, why our universe has mostly matter, not antimatter, or Sal’s “homochirality”. In other words, an entirely “non-intentional” process, which, at the outset, is as likely to produce one end-state as the other, can nonetheless, if there are feedback loops, i.e. State N has an effect on State N+1, get into a “rut” where we can predict, with near-certainty, which of the end-states will actually occur.
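The “rut” process can be sketched the same way. The rule is the one stated above (throw Heads if the random number is less than the proportion of Heads in the last N throws); the window sizes and the alternating starting history are assumptions, since the original parameters are not given, and the function names are illustrative.

```python
import random
from collections import deque


def rut_sequence(n, rng, window):
    """Each toss is Heads with probability equal to the recent proportion of Heads."""
    # Assumption: seed the history with alternating Heads and Tails so neither side is favoured much.
    recent = deque((i % 2 for i in range(window)), maxlen=window)
    seq = []
    for _ in range(n):
        toss = 1 if rng.random() < sum(recent) / window else 0  # recent Heads make Heads likelier
        seq.append(toss)
        recent.append(toss)
    return seq


def heads_proportions(n_runs, n_tosses, window, seed):
    """Proportion of Heads in each of n_runs sequences."""
    rng = random.Random(seed)
    return [sum(rut_sequence(n_tosses, rng, window)) / n_tosses for _ in range(n_runs)]


if __name__ == "__main__":
    for window in (100, 4):  # hypothetical "fairly large N" and "very extreme" settings
        props = heads_proportions(1000, 500, window, seed=1)
        mostly_heads = sum(p > 0.8 for p in props)
        mostly_tails = sum(p < 0.2 for p in props)
        print(f"window {window}: {mostly_heads} mostly-Heads runs, {mostly_tails} mostly-Tails runs")
```

With a short window the history soon fills up with one side, after which this rule can never produce the other side again, so each run locks in to Heads or Tails even though nothing at the outset decides which.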

Which brings me back to my key point: “chance” is all about what we do not know, not about what we do. It is not a causal factor; it does not “explain” anything. It is how we characterise our uncertainty. It has little to do with whether an outcome is “intended” or not, and everything to do with whether we can predict it, and whether this will change with new data.

In my final example, I would give equal odds for “more heads” to “more tails” as the final outcome before I started But after a few “tosses”, I’d see which way “the wind was blowing” and become much more certain that one outcome would eventuate than the other.

So, to address your point, yes, I “manipulated” chance – but so does the person who tosses a coin, simply by dint of choosing that method of determining the outcome. There are many other “chance” methods, and many do not give equiprobable outcomes, and of those that do, in some, those probabilities change as the run proceeds, as the future can be predicted, partially at least, by the past, as in nature, including inanimate nature. We may not be good weather forecasters, but we aren’t bad, and weather is certainly not “intelligent”! And the fact that we can make only rough guesses means that there is a large element of “chance” involved, to use the word in its “common sense” meaning.

So I’m glad you liked the “brilliance” of my examples, and I’m sorry if I “baffled” you! But my OP makes what I think is a really key point, and one that is regularly overlooked by IDists – or misunderstood.

Non-intentional processes (sometimes called “chance”) can also fail to be the outcome of “necessity” (in my last run, there was absolutely no reason at the outset why any one run should be mostly Heads, or mostly Tails, and in fact those two outcomes had equal probability) and yet produce patterns radically different from the sort of “random mixing” that people think must result from such processes.

And some of them are extremely interesting and very complex – vortices, for instance, or fractals. And, interestingly, can have very low entropy (a tornado for instance).

In other words, non-intelligent processes are perfectly capable of producing the kinds of patterns IDists like to think are the “signature” of ID. And when pressed to pin down those patterns in order to exclude those that are clearly not designed, but the result of some systematic but non-intelligent process, they end up with blatantly circular reasoning: this pattern doesn’t have CSI, because it was highly probable by non-design processes.

Do you see the problem?

What happens when your admitted ignorance causes you to recognize design while trained specialists in the area conclude no evidence of design?

How do you protect against false positives caused by that ignorance?

Bumped for WJM, who seems to have come down with selective post blindness again.

I don’t think there was any material difference in how I answered here and at UD, William. No big deal but I’m curious if you think my statements on crop circles are not consistent.

phoodoo:

To be fair, there are some cases where an anti-IDist will actually present the technical argument (show their work, so to speak, or link to something and actually quote it and explain how it is relevant, etc.). Those cases are rare in my experience. They may have a point, but in those cases I’m no expert and cannot pass meaningful judgement – which is why I limit my criticisms/argument to that which can be successfully argued by other means. That’s not to say I don’t still make mistakes 🙂

Education bluffs are generally easy to spot; the person in question offers no actual technical argument, no links with quotes and explanations, they don’t show their work, they don’t make their case; all they do is point out that their opponent doesn’t have the expertise necessary to parse a hypothetical technical argument, and then carry on as if the fact that their opponent is a layman relieves them of the duty of actually presenting their technical case.

Also, it doesn’t take someone with expertise in biology or statistics to recognize fundamental flaws in logic, such as if they are setting up a straw man at the beginning of their technical argument. For instance, for years a technical argument existed that supposedly rebutted irreducible complexity by showing how precursors for the bacterial flagellum could have evolved and had use before they “came together” to form the flagellum. While I did not have the expertise in the field to critique their argument about how those “could have” evolved, it was easy to see that what they were making a case about was a straw man. It had nothing to do with Behe’s actual argument, which is that natural selection cannot select on the basis of a functional flagellum before there is an actual functioning flagellum, and there is no functioning flagellum without every part in place and functioning as a flagellum because if you take away any part, the flagellum doesn’t work as a flagellum. That the individual parts could have existed elsewhere, doing other things, before the flagellum was “fully constructed” and operational is a non-sequitur.

Another point: making the case that something “could have” evolved (bare possibility) is not making the case that such a sequence is scientifically plausible. There are simple logical errors even a layman can find in technical arguments that require no technical expertise.

If you don’t understand them, how do you know they are “meaningless”? If I see a page in arabic, I have no idea whether it is gibberish or not. It might be, or it might not be.

I put to you that my terms are not gibberish at all, and I have tried above to explain more clearly what I mean. I am, I have to say, getting a little fed up with this argument that I am being “confusing”. It’s as though I am “cheating” by being a bit too clever!

Well, it does. But it may be an unfamiliar term. By “non-linear” and “chaos” I was referring to the math of “chaos theory” which is a poor term, but which is extremely powerful math – and is the math of systems in which the state of a system is influenced by a previous state of the system (unlike fair coin-tossing where each toss is independent of the previous toss). This regularly gives rise to “non-linear” effects, for example, a small change in the state of the system at Time A can have amplified effects at Time B. And the natural world is full of such systems – weather being a classic example. Try James Gleick’s book “Chaos” which is very readable. And I’m sure one of the mathematicians will be along to make it clearer than I have just done.
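To make “a small change at Time A can have amplified effects at Time B” concrete, here is a short sketch using the logistic map, a standard textbook example of a chaotic system. It is not one of the systems from the OP; I am using it as an assumed stand-in because each state depends only on the previous state. The function name, the parameter value r = 4, and the starting values are illustrative choices.

```python
def logistic_trajectory(x0, r=4.0, steps=100):
    """Iterate x -> r * x * (1 - x), returning every state visited.

    Each state is computed purely from the previous one: a simple
    feedback system with no randomness in it at all.
    """
    states = [x0]
    for _ in range(steps):
        x = states[-1]
        states.append(r * x * (1.0 - x))
    return states


def max_gap(a, b):
    """Largest difference between two trajectories at any single step."""
    return max(abs(x - y) for x, y in zip(a, b))


if __name__ == "__main__":
    # Two starting states that differ by one part in ten billion.
    first = logistic_trajectory(0.2)
    second = logistic_trajectory(0.2 + 1e-10)
    print("gap at start:", abs(first[0] - second[0]))
    print("largest gap over 100 steps:", max_gap(first, second))
```

The rule is completely deterministic, yet the two trajectories end up bearing no resemblance to each other, which is why long-range prediction in such systems is so hard in practice.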

Yes, indeed it is. That is because Darwin had the crucial insight to see that the prevalence of a trait in the next generation was dependent on its usefulness in the previous – in other words, though he did not have the math to express it – it is a chaotic, non-linear, feedback system.

Yes, it is, absolutely. Darwinian (not just “neo-Darwinian”) theory says exactly that – that useful features arise from a chaotic system – where “chaotic” in this context means something very precise – a system with feedback loops, and thus the capacity for highly non-linear changes. Not just that, but a system with what are called “attractor basins” – which “guide” populations to optimum fitness within the current environment.

Certainly I could try to be more concise, but my terms, I insist, are not irrelevant, and the lengths I go to are in order to be precise, at the cost, I fear, of concision. I will try to combine precision with concision more effectively! At least I provide pictures 🙂

But please do not confuse precision with “inapplicable nonsense”. I can give you any number of applications of what is most certainly not “nonsense” here, and indeed have done in my post to you above. The devil is certainly in the details, but if you do get to grips with chaos theory, or even with my relatively simple examples in the OP, it should start to make some sense.

No, I don’t believe so. There are many things I don’t understand, because I do not have the technical expertise to do so. That does not give me the right to dismiss them as “inapplicable nonsense”. But I do not think anything I have written is beyond anyone with normal intelligence to understand, with a bit of effort. I managed to understand it myself, and I do not think I am particularly brilliant, although I do have a reasonable science and math training.

But effort is most certainly required, and I do find myself somewhat annoyed when people who honestly (and commendably) say they do not know the relevant math, nonetheless arrogate to themselves the capacity to say that it is nonsense.

Sure, some things look mathy and aren’t. Tbh, I’d say that a lot of ID output is like this. But you need some math skill to see the cracks. Until you have those, you won’t know whether mine has cracks or not. When you do, let me know what is wrong with my math. Until then, I ask you not to assume that it is wrong.

So are bullshit non-answer smokescreens used to avoid questions about one’s claims that one can’t answer.

phoodoo, have you figured out what a fair coin is yet? 🙂 Here is some reading material for you. It’s not really that complicated.

I have shown my work, in this OP, William, and explained it in detail to Phoodoo, above (I didn’t in the OP, as I hoped people would rise to the challenge of inferring the processes that had generated each distribution).

Sometimes, I have noted, the person simply assumes knowledge that their discussion partner does not have. This is not an “education bluff” – it’s simply a mistake in evaluating the technical knowledge of the person they are talking to. By assuming an “education bluff” you are missing the possibility that there is no bluff, merely an “education gap”. It is also, as it happens, against the rules of this site! If you think someone is bluffing – ask what they mean. You may find they aren’t.

As I have now discovered that you seem very confused about the nature of null hypothesis testing, I will make sure I am very explicit when I next refer to it. But in the mean time, William Dembski makes it very clear here.

And your example is a fine example of self-refutation! You have just made an error in logic, precisely because you lack (apparently) the relevant education. Natural selection simply does not require that a flagellum (or any other feature) functions as a flagellum before it is one. All that is required is that the precursors confer some benefit, which was exactly Pallen and Matzke’s point. Moreover, as I say in my more recent OP, we now know that drift is also important, and that IC features can evolve, in principle, with not only many neutral intermediary steps, but with actual deleterious intermediary steps. This is what the Lenski AVIDA paper showed. Logically, IC is simply not a bar to Darwinian evolution, and certainly not a bar to Darwinian evolution plus drift. So Behe was indeed refuted, logically. But your lack of relevant expertise has prevented you, apparently, from seeing that logic.

What is the difference between “could have” and “plausible”? Pallen and Matzke provided a perfectly plausible (though not necessarily correct) pathway. That means the claim that the flagellum “could not” have evolved by Darwinian pathways is falsified. Possibly it “did not” but it is false to say that it “could not”. And in fact, Pallen and Matzke’s hypothesis makes further testable predictions.

Apparently not.

Apparently WJM’s psychic powers that allow him to recognize “logic errors” in scientific analysis when he sees them despite his technical naivete are the same ones that allow him to recognize “design” when he sees it. He’s just as successful in both.

Then what “drives” evolution? The only answer you have is chance.

Define “drives”. Evolution is a process of which chance is but one part. The process taken as a whole is what “drives” evolution.

Blas,

Yes, evolution is driven by a random process (genetic variations) and is steered by a deterministic one (natural selection).

The deterministic one seems very random too, as drift and any type of mutation can give a positive result. Is unpredictable not a synonym for chance?

This is a contradiction in terms. Random is the opposite of deterministic. Selection acts in a predictable way, preferring certain outcomes.

Take the fair coin process. Without any selection, you will get equal numbers of heads and tails. Now add selection: accept all heads and only half of tails. The resulting process will be a biased coin: it will have two heads for one tail after a large number of throws.
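The arithmetic can be checked with a quick simulation. This is a minimal sketch under the rule just stated (keep every head, keep each tail with probability one half); the function name and parameters are hypothetical and chosen only for illustration.

```python
import random


def selected_tosses(n_accepted, tail_keep_prob=0.5, seed=None):
    """Toss a fair coin and apply selection until n_accepted tosses are kept.

    Heads are always kept; tails are kept with probability tail_keep_prob.
    True means Heads, False means Tails.
    """
    rng = random.Random(seed)
    kept = []
    while len(kept) < n_accepted:
        heads = rng.random() < 0.5           # the fair, random step
        if heads or rng.random() < tail_keep_prob:
            kept.append(heads)               # the selection step
    return kept


if __name__ == "__main__":
    kept = selected_tosses(100_000, seed=0)
    heads = sum(kept)
    tails = len(kept) - heads
    print(f"heads:tails = {heads / tails:.2f}:1")
```

Heads survive with probability 1/2 per toss and tails with probability 1/2 × 1/2 = 1/4, so among the tosses that are kept the expected proportion of heads is (1/2) / (1/2 + 1/4) = 2/3, i.e. two heads for every tail.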

Yes, intelligent selection takes heads only. What does NS do? When it works (because many times it does not, as in drift), it takes what has “reproductive success”. And what has “reproductive success”? Well, it depends. We do not know. We can’t predict. It is a matter of chance.

At UD, WJM asked:

Alan Fox responded:

WJM asked:

Alan Fox responded:

As I pointed out there, your response was a sequence of non-sequiturs. Real vs Imagined? We don’t know if intelligence is real? Crop circles are made by people but we don’t know how intelligent they are? A fluke whirlwind might cause a “round depression” in a field?

Yes, your answers there were utterly consistent; but writing what they were utterly consistent with would no doubt land this post in guano.

Which is why I appreciated your remarkably straightforward answer above. But, perhaps you could clear something up for me: why did you add the caveat

Do you think imaginary beings can generate configurations of matter? Or, perhaps you mean to use the term “hypothetical” instead of “imaginary”?

If so, rest assured, I’m making no case for who or what created any particular configuration of matter, other than that they were an intelligent designer.

Indeed, one of the issues I’m hopefully putting to rest here (among others) is the notion that any information at all about the designer is necessary, at least in some cases, to know to a high degree of certainty that a particular configuration of matter is the result of (categorical) intelligent design.

If we can agree (as Liz has, in the alien intelligences on the moon scenario described upthread) that we can expect alien intelligences to recognize the lunar rovers, etc., as intelligently designed objects, and that regardless of the who or what or how of crop circles, stonehenge, etc. we can have a high degree of certainty they were intelligently designed, and even if we found the Liz message in ancient ant DNA with no viable explanation as to what, who or how, we would still know to a high degree of certainty the message was intelligently designed. No formal null testing required.

At that point we can agree (at least for the sake of argument!) that there exists some kind of actual commodity that can be configured into a material medium only by an intelligent agent (plausibly speaking) that is fairly easily recognized by other intelligent agents as the work of another intelligent agency, even if the only thing they have to go on is that particular configuration of matter.

The pertinent questions become (and should be), when dusted off from all the anti-ID blocking and bluffing, what is that commodity? Can it be formally quantified? Why is it recognizable? Why would we expect non-human intelligences to be able to recognize it, and why would we expect to be able to recognize non-human configurations that employ that commodity?

Of course, not all intelligent designs deploy that particular commodity, or deploy it in sufficient (quantity? quality?) to be easily recognizable. But some designs certainly have it to the degree that denying the thing is intelligently designed becomes an implausible absurdity.

What is that quality/characteristic?

Of course not all of her examples are completely stochastic processes. If you randomly roll a pair of dice, and use a computer to delete every result of the dice except when you roll a six and a four, and then say, these rolled dice only always end up with a sum total of 10, that is not a stochastic process, no matter how badly you want to butcher the meaning of words.

Your error in logic is to claim that I made such an implication; I did not. You are so utterly mischaracterizing what I said it’s jaw-dropping. I didn’t say natural selection requires a flagellum to function as a flagellum before it is one. How absurd! I said that natural selection cannot select on the basis of a functioning flagellum before there is a functioning flagellum. Natural selection doesn’t select “for” any future use; it only selects what is currently useful or of benefit (or neutral). A flagellum that is missing a single part is not a flagellum waiting for a part; it’s something else entirely under Darwinian evolution. NS only selects what is, not what will be two or three added parts down the road.

You are committing the same blatant error of logic Behe and others have already rebutted by pointing out that their conception of IR is a straw man. Nobody denies that it is possible for evolution to generate the parts of what will eventually construct a working flagellum because of advantages they confer for non-flagellum reasons (or just happen to be lying around neutrally); that’s not the point. The flagellum, like a mousetrap, is a factual irreducibly complex system. That fact has nothing whatsoever to do with evolution; it’s an analysis of the parts and their necessary arrangement for the function.

The point Behe makes about IR being problematic for undirected evolution (not whether IR systems exist at all in biology – of course they do) is that their creation without teleology is implausible, not impossible.

William J. Murray,

How do you measure this ‘implausibility’? The essence of the bacterial flagellum is a proton-driven rotational motor, presumably an ATPase. ATPases and proton gradients, running in both directions, are involved in fundamental energy conversion and transport at the cell surface, so these are plausibly available to non-motile cells. I don’t see a fundamental implausibility in the co-option of one of these basic systems to spin a short protein rotor, which subsequently elaborates into the modular modern structure of flagellin subunits. How well does a propulsion system have to work before it is a propulsion system? I’d say, logically, it just has to move the critter.

I would say that their creation is not only implausible, but most definitely impossible without teleology. The mousetrap argument is so illogical it’s beyond funny. The spring had some other purpose before. And the hook had some other purpose before. And the arm which snaps back and breaks the mouse’s neck had another purpose before. And the screws which hold the spring to the board had some other purpose before. And springs and hooks and screws and swing-arms and platforms are appearing at random all the time in the organism, and sometimes they hang around, or maybe just drift along through the population because they don’t kill it, and then bingo, one day they all get rearranged, in exactly the right order mind you, and the hook gets placed here, and the swing-arm there, and the spring gets tensioned there, and by golly, you know what, I think I can figure out a way to use this damn thing strapped to my back. I survived for a million years without this little mousetrap, but heck, this way is even better, I am going to use this thing like crazy now, forget the old way I lived, this is awesome!

And that as I said before is why some kid is going to be so lucky to be born with an O ring gasket on his forehead, because one day his great great grandkids are going to need that as the last crucial piece to migrate into their little jet packs to keep them from leaking so they can use them to fly to Mars. If only they can make sure to be lucky enough that the randomly mutated perfectly conical, heat shielded, graduated nozzle with the right size gas pressure relief valves and reverse threaded fuel canister connecting arm, with gps wires going into the central computing relay switches, is pointing the right direction to keep them from being shot into the ground, and ALSO hope they keep getting laid in the process.

Lucky lucky bastard.

In order to demonstrate the plausibility of the construction, over time, of what ends up being a functioning bacterial flagellum, it is not enough to show that each individual part of the flagellum merely existed before the flagellum; the Darwinist must argue the construction of the flagellum in terms of non-flagellum benefit. Whatever one starts with as their previously functioning, selectable base of future additions/alterations, every increment thereafter must provide a non-flagellum benefit until the last addition/alteration results in a functioning flagellum.

This is one of the reasons that many programmers, engineers and designers find the theory of evolution absurd at face value:

1. Designing and building a functioning device like a flagellum is quite a task;

2. Now attempt to do it starting with something that has an entirely different function,

3. Then attempt to add to that original device parts not specifically designed to attach to that device

4. Nor to meet any additional or future specifications required either by the next function acquired or by your ultimate target device/function,

5. that were being used in other kinds of devices for other purposes

6. that confer new, beneficial functions each time you do this (each evolutionary step towards a functioning flagellum)

7. Even though none of the added parts were designed to fit nor to meet the demands (specifications) of any of the newly acquired functions along the way.

It’s possible that an intelligent designer could do this, although I doubt you’d end up with anything useful for very long. Most likely, without parts specifically designed to fit and meet demand specifications for the new functions, the device would very quickly break down (if it ever functioned at all for any duration that could be selected for).

High-energy torque systems like the flagellum, in the real world, require specifically fitted parts designed to meet workload specifications. You cannot even intentionally (much less blindly) cobble it together with spare parts in the first place – not without deliberately machining fitting nodes and adding infrastructural supports specifically for the purpose in mind.

I don’t have a problem with that.

There is sufficient teleology in homeostatic processes to account for biology. And homeostasis is naturally occurring – it can be found in weather systems.

You’d have to ask him. It’s his argument. I can as easily ask how Darwinists measure the plausibility of their side of the claim about the origination of the flagellum?

The particular point I’m making here is that one can find basic logical flaws in technical arguments even if one is not well-educated in that particular field. Liz and other Darwinists have argued a straw man version of the IC argument for a long time. It has nothing to do with whether or not Darwinistic forces “can” deliver the parts and build an IC system, or if one can find where the parts were useful elsewhere, but whether or not non-teleology is a scientifically plausible categorical explanation for the construction of such IC systems.

William J. Murray,

And the problem with that is that basic combination of ignorance and arrogance. You have declared flagellar evolution implausible without any apparent knowledge of bacterial biology or the relevance of protein homologies and exaptation. So how well placed are you to make that assessment? You defer to expertise – but only Behe’s. Everyone else is undertaking an Education Bluff, or a Literature Bluff.

http://www.pnas.org/content/104/17/7116.full

A scientifically plausible categorical explanation? What a confusion of concepts!

Well, if a scenario is proffered which scientists well-versed in the subject find plausible, and which can be subject to at least some empirical investigation, by whom is ‘non-teleology’ to be adjudicated implausible? The rubes? The fact that modern flagellar systems can be fully disabled by removing any individual part is tediously irrelevant to the possibility of their construction by evolutionary process. A logician like you should be able to spot how such a situation could logically arise. Yet you apparently refuse to investigate that avenue.

If the IC argument stated as “removing any part disables function, therefore it is a problem for Darwinism” is a straw man, then what is the correct version?

phoodoo,

Well, I think the argument is that it is so implausible that it is for all intents and purposes “impossible” – but, there are no physical laws such a convenient arrangement of parts would violate.

I think that the main problem most anti-ID advocates have is that they are willing to embrace bare possibility as sufficient, as long as they can avoid ID. Back in Darwin’s time, when a cell was thought to be basically nothing more than a cell wall, a nucleus, “protoplasm” and maybe the ribosome, it was perhaps plausible to consider that there was some inherent quality about “protoplasm” that would accommodate the Darwinistic scenario. The problem is that we now know what is in that protoplasm, and the non-teleological scenario can only be clung to by those willfully ignorant of what it takes to engineer functioning, useful machines in the real world, which inhabit that protoplasm in microscopic droves and all work in amazing, organized unison.

Where did I do that?

That a thing is logically possible doesn’t make it logically plausible. The onus is on those who claim it is plausible to present their argument. An argument that it is possible is not sufficient to demonstrate it plausible.

I agree that it is possible for darwinian processes in theory to construct a functional bacterial flagellum; I’m not familiar with any arguments that it is plausible. I’m only familiar with arguments that it is not plausible. Please direct me to arguments that it is plausible.