As The Ghost In The Machine thread is getting rather long, but no less interesting, I thought I’d start another one here, specifically on the issue of Libertarian Free Will.

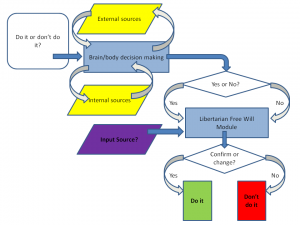

And I drew some diagrams which seem to me to represent the issues. Here is a straightforward account of how I-as-organism make a decision as to whether to do, or not do, something (round up or round down when calculating the tip I leave in a restaurant, for instance).

My brain/body decision-making apparatus interrogates both itself, internally, and the external world, iteratively, eventually coming to a Yes or No decision. If it outputs Yes, I do it; if it outputs No, I don’t.

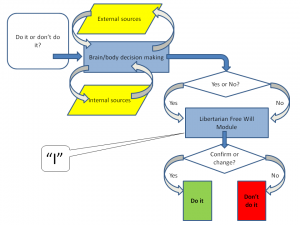

Now let’s add a Libertarian Free Will Module (LFW) to the diagram:

There are other potential places to put the LFW module, but this is the place most often suggested – between the decision-to-act and the execution. It’s like an Upper House, or Revising Chamber (Senate, Lords, whatever) that takes the Recommendation from the brain, and either accepts it, or overturns it. If it accepts a Yes, or rejects a No, I do the thing; if it rejects a Yes or accepts a No, I don’t.
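The accept-or-overturn logic of that revising chamber can be written out as a minimal Python sketch. This is only an illustration: the function name `lfw_module` and its two boolean parameters are hypothetical, and nothing here says how the module would decide whether to accept, which is exactly the open question.

```python
def lfw_module(recommendation: bool, accepts: bool) -> bool:
    """Return True if the action is carried out.

    recommendation: the brain's output, Yes (True) or No (False).
    accepts: whether the hypothetical LFW module accepts that recommendation.
    """
    # Accepting passes the recommendation through; rejecting overturns it.
    return recommendation if accepts else not recommendation


# Walk through the four cases described in the text.
for recommendation in (True, False):
    for accepts in (True, False):
        acted = lfw_module(recommendation, accepts)
        print(f"recommendation={recommendation}, accepts={accepts}, acted={acted}")
```

Note that `accepts` is simply passed in as an argument here; the sketch is silent about where that value comes from.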

The big question that arises is: on the basis of what information or principle does LFW decide whether to accept or reject the Recommendation? What, in other words, is in the purple parallelogram below?

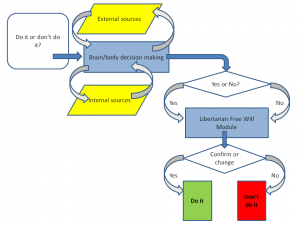

If the input is some uncaused quantum event, then we can say that the output from the LFW module is uncaused, but also unwilled. However, if the input is more data, then the output is caused and (arguably) willed. If it is a mixture, to the extent that it depends on data, it is willed, and to the extent that it depends on quantum events, it is uncaused, but there isn’t any partition of the output that is both willed and uncaused.

It seems to me.

Given that an LFW module is an attractive concept, as it appears to contain the “I” that we want to take ownership of our decisions, it is a bit of a facer to have to accept that it makes no sense (and I honestly think it doesn’t, sadly). One response is to take the bleak view that we have “no free will”, merely the “illusion” of it – we think we have an LFW module, and it’s quite a healthy illusion to maintain, but in the end we have to accept that it is a useful but ultimately meaningless fiction.

Another response is to take the “compatibilist” approach (I really don’t like the term, because it’s not so much that I think Free Will is “compatible” with not having an LFW module, as that I think the LFW module is not compatible with coherence, so that if we are going to consider Free Will at all, we need to consider it within a framework that omits the LFW module).

Which I think is perfectly straightforward.

It all depends, it seems to me, on what we are referring to when we use the word “I” (or “you”, or “he”, or “she” or even “it”, though I’m not sure my neutered Tom has FW).

If we say: “I” is the thing that sits inside the LFW, and outputs the decision, and if we moreover conclude, as I think we must, that the LFW is a nonsense, a married bachelor, a square circle, then is there anywhere else we can identify as “I”?

Yes, I think there is. I think Dennett essentially has it right. Instead of locating the “I” in an at best illusory LFW:

we keep things simple and locate the “I” within the organism itself:

And, as Dennett says, we can draw it rather small, and omit from it responsibility for collecting certain kinds of external data, or for generating certain kinds of internal data (for example, we generally hold someone “not responsible” if they are psychotic, attributing the internal data to their “illness” rather than “them”), or we can draw it large, in which case we accept a greater degree (or assign a greater degree, if we are talking about someone else) of moral responsibility for our actions.

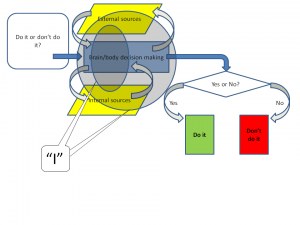

In other words, as Dennett has it, defining the self (what constitutes the “I”) is the very act of accepting moral responsibility. The more responsibility we accept, the larger we draw the boundaries of our own “I”. The less we accept, the smaller “I” become. If I reject all moral responsibility for my actions, I no longer merit any freedom in society, and can have no grouse if you decide to lock me up in a secure institution lest the zombie Lizzie goes on a rampage doing whatever her inputs tell her to do.

It’s not Libertarian Free Will, but what I suggest we do when we accept ownership of our actions is to define ourselves as owners of the degrees of freedom offered by our capacity to make informed choices – informed both by external data, and by our own goals and capacities and values.

Which is far better than either an illusion or defining ourselves out of existence as free agents.

My argument is philosophical, not biological. How human beings function at a neurological level is irrelevant to that argument.

The biological aspect of the LFW vs BA argument is irrelevant; either we have a will that is caused, or we do not, regardless of what terminology one uses to describe either side of the argument. If our will is caused, we cannot will anything other than what a caused “we” are in turn caused to will. If will is autonomous, we cannot be caused to will anything, and thus an essential part of “we” is uncaused, and appreciably immune to causation, which makes it irreconcilable with an entirely materialistic cause-and-effect worldview, where all causes are themselves caused, or are random.

The argument is not about biology or terminology, but rather flows from: GIVEN LFW, or GIVEN non-LFW, what then?

Then bullshit.

Deriving logical consequences requires very precise definitions. You cannot derive any logical consequences from the hand-waving pseudo-definitions given for free will.

The definition of caused vs uncaused is as precise as the argument I’m making requires. No matter how complicated, ambiguous, chaotic, random, or unpredictable you describe that which causes will, it is still caused under any materialist paradigm.

What precisely causes it, and precisely how, is entirely irrelevant to any point other than that of obfuscating the actual argument at hand.

William,

That’s right. We do not have the freedom to choose our selves, and that remains true even if materialism is false. You cannot choose to be Gandhi. You cannot choose to be Dahmer. You can only be William J. Murray. Your acts are constrained by your nature.

However, that constraint is the very source of your freedom. If you were unconstrained by your own nature, then you would be equal parts Gandhi, Dahmer, and trillions of other people. There would be no pattern to your choices. You would be randomness personified, which isn’t freedom at all.

Libertarians hope for a kind of “freedom” that banishes the self as a causal agent.

Libertarian free will is incoherent.

William,

Not true. We don’t require access to the absolute, and we don’t need to pretend that we have access to the absolute.

Whether you are a materialist or not, it is obvious that

1) we certainly don’t have access to the absolute 100% of the time, since we make mistakes;

2) we might not have such access at all;

3) if we do have partial access, we have no reliable way of determining when we have access and when we don’t; so

4) the situation is no different from having no access at all. In any given instance, we might be right or wrong, and thinking about it with our imperfect minds is the only way we can decide.

P.S. You’re misusing the word “supervene”.

When you are not functioning at a neurological level, your “philosophy,” as you like to mislabel your assertions, is irrelevant.

It’s apologetics trying to do science. Often tried, never works.

It’s no different from trying to determine the shape of the earth from pure reason, or the orbits of the planets.

There is a reason, William, why science is iterative and collaborative.

Or the FSCI/O of a Flagellum that came together totally at random in a tornado. Yet they keep on trying at UD.

I have no problem with the idea (apart from not being sure what “BA” stands for, but I guess something like “Brain Algorithm”?) that envisaging something like “Libertarian Free Will” is a healthy model. However, because it actually breaks down on analysis, I myself am happier (and healthier) with a “self-efficacy” model in which I define the boundary of my “self” as the degree to which I accept moral responsibility for my actions. I don’t, for example, accept moral responsibility for what I may do in my sleep or if forcibly drugged, or even for my inability to remember certain things, as long as I have done due diligence with lists and reminders.

I don’t think the self is an illusion at all, but I think it’s more satisfying to be able to describe it coherently, and having glimpsed the incoherence of LFW, I can’t take it seriously any more.

BA = “Biological Automata” or thereabouts.

Ah, of course, thanks!

Well, I got the gist.

Liz,

While I think that your argument for what you call “self” and “moral responsibility” is flawed, I don’t have much of a problem with technically flawed belief systems that still accept personal, moral responsibility. I think a lot of theists also have technically flawed belief systems and hold a flawed understanding of morality, but at the end of the day, IMO, it doesn’t matter whether or not you believe Apollo pulls the sun through the sky on a chariot or you believe the Earth orbits the sun as long as you can correctly set your clock by it and arrive at all your appointments on time.

Most people accept personal responsibility not because of philosophy, but because it was ingrained by their mother or mother substitute, and reinforced by early social experiences.

There are people who don’t seem to pick this up, but as far as I can tell, such people are not influenced by philosophy either.

Well, I accept that you think it is flawed William (although I’d be interested to know why), but would you agree that LFW is also flawed, as suggested in my OP?

As long as you are living in a bronze age civilization that might be right, but in a technological civilization things like that make a difference.

For example, the GPS system would not work without corrections for general relativity.

And pharmaceuticals could not be made without reference to a material body. Antidepressants and anti-psychotics could not be made without reference to a material mind.

Liz,

Regardless of whether or not LFW is a flawed concept, it is a necessary one, one which you operate under in all of your activities, whether you agree to it or not, and whether you can make sense of it or not.

It’s like denying the rules of thought (fundamental principles of logic); you cannot do so without employing them. You cannot deny LFW without necessarily implying it exists. You cannot argue against it without employing it as the basis for your argument, even if you cannot see it.

One can use whatever semantic arrangements they want, or employ whatever rationales make them feel intellectually fulfilled about their concept of what will is and how it works in their system, but all of that still relies upon LFW as a causally anomalous, materially autonomous agency.

There’s just no way around it.

The same is true of the assumed existence of an absolute arbiter of true statements, and the assumption that humans have autonomous access to it; every argument one makes that they consider sound and convincing depends upon those assumptions, whether one admits it or not, whether one can see it or not. The form and structural assumptions of every such argument are a refutation of the very butterfly wind and pizza worldview such arguments advocate.

One can make absolute statements about Platonic abstractions, but not about real things.

Is that an absolute statement about what can be said about real things?

Sure. Why is that a problem?

William,

You are arguing that coherent action requires an incoherent premise. Doesn’t that strike you as a bit… odd?

But no, LFW is not an inevitable assumption. My actions are my own, my thoughts are my own, my choices are my own, and none of that depends on assuming that those things are completely unconstrained and uncaused.

Sure you can. It is quite possible to think, deny, and argue even if thinking, denial, and argumentation are completely caused or even completely determined.

You may believe that valid thinking, denial and argumentation are possible only if they are “materially autonomous” (which is also incorrect), but that is a separate issue from whether they are possible at all.

Because you want to eat the cake and have it.

You want to keep your “materialistic model of reality” and the “moral responsibility”. As one contradicts the other, you invent some words that avoid saying that “moral responsibility” is an illusion, as the “materialistic model of reality” demands.

In that way you live happily with two contradictory models: the “moral responsibility model”, so that you can accept as not an illusion your son saying “I love you mom”, and the “materialistic model of reality”, in order to feel like a good scientist.

William,

Here’s an experiment that will show otherwise. For the next hour, I’d like you to assume that you do not have “autonomous access” to “an absolute arbiter of true statements”.

If you undertake this experiment, you will find that you can still think, reason, and argue. The only difference is that you will no longer claim absolute certainty (which you shouldn’t be doing anyway, given the obvious fact that humans are fallible).

Any thought, any chain of reasoning, any argument you make might be mistaken, because you do not have access to the Absolute Arbiter. So what? It’s already true that you might be mistaken, because you, like all humans, are imperfect. Nothing changes.

You have not demonstrated that it is a necessary concept. I suggest that what is necessary, or at least beneficial, is a sense of self-efficacy. There are alternative models of I-as-agent that make at least as much, and I would argue more, sense than “LFW” which is incoherent, as I have demonstrated (an action cannot be both willed and free of constraint), for example, the model that there is an entity, the organism known as “Lizzie”, who makes decisions, and ascribes them to the will of an agent she refers to as “Lizzie”.

You are defining self-efficacy too narrowly, I suggest – indeed, I suggest you are defining the “self” far too narrowly. An entity that actually encompasses the decision-making apparatus we are blessed with makes a lot more sense, and is freer, than one deprived of constraining information.

Well, I wouldn’t know, because I don’t know what “denying the rules of thought” actually means.

Of course you can. I denied it in my OP, and did not imply that it exists. Indeed I did more than “deny” it – I demonstrated that it is an oxymoron- a square circle. It cannot exist.

No, it doesn’t. It can rely on something much more dependable because actually non-contradictory: the idea that the thing I refer to as “I” is the organism-known-as-Lizzie, equipped, inter alia, with a brain. Not some causeless cause that makes necessarily uninformed decisions.

Well, there is, and it’s the road you have to take, because it turns out there’s no way through it.

Next, William is going to tell us that he believes in Santa Claus and in the Tooth Fairy.

This strikes me as the same old map-mistaken-for-the-territory canard that a lot of creationists use to defend the existence of God. The old saw goes something like, ‘in order to deny God you have to posit the existence of a god with all the characteristics and baggage I conceive said God as having, in order to try to establish it does not exist. Therefore you are establishing as true the very thing you are attempting to deny.’

Of course, the clear problem with such a charge is that people can, quite easily, discuss a concept hypothetically and model the conceptual parameters without ever having to accept the concept’s actuality.

It’s like putting an idea into a test environment and recognizing that the test environment is then quite different from how the production environment actually operates. Ergo, the idea doesn’t exist in the production environment.

Of course you don’t have to accept it; you don’t have to accept the necessary validity of the LNC, but every word one writes, and every point one makes, is drawn from the premise of the LNC being necessarily valid, even if the person making the argument doesn’t realize it.

William J. Murray,

Here is an experiment which you should do under careful, medical supervision.

In the presence of a number of doctors and a full complement of recording equipment and a crew of operators, strip yourself naked and place yourself inside a refrigerated meat locker until your core body temperature reaches somewhere around 60 degrees Fahrenheit. Now try to exercise your “libertarian free will.”

If you don’t like cold, place yourself in a sauna and get your core body temperature up to about 106 degrees Fahrenheit. Try to exercise your “libertarian free will” now.

Since you don’t appear to notice physical evidence even at normal temperatures, it will be certain that you will not notice such things outside the normal temperature range. That is why you will need those doctors and recording equipment around you when you do the experiment. At least showing physical evidence to someone else will be convincing to them.

These little experiments will demonstrate to those watching you that your “libertarian free will” is temperature dependent; there is just no way around it.

That’s also how KF “wins” his “arguments” over at UD.

I don’t think this is true, William.

As I said in my post on “the laws of thought”, I suggest that the vast majority of what we think and say is probabilistic – Today will probably be fine, but there’s a slight risk of showers around midday, so I’ll take my umbrella. This debit card is probably valid, unless my salary hasn’t been paid to my account for some reason, so I’d better take my credit card in case.

In other words, most statements are neither true nor false, and instead carry an explicit or implicit assumption of uncertainty. And much decision-making is about minimising the consequences of error rather than trying to be correct.

Anyway, rather than pursue these rabbits down rabbit-holes: William, can you explain how a decision can be both uncaused and informed?

There are some concepts that cannot be “demonstrated” because it is through and by those concepts that anything whatsoever is “demonstrated”, shown, or proven. For example, the LNC cannot be proven, because it is the concept by which things are proven, or by which things are demonstrated.

But the LNC is only part of the necessary trio of fundamental conceptual elements required for anything to be “demonstrated” or “proven” or “shown”; second is an observing entity that has the free capacity to be shown, to understand, to not be bound to whatever butterfly wind and pizza determines, but to be free of such material programmed constraints to make deliberate (not programmed) conclusions; and third, the absolute arbiter – the assumption that there is a true statement that can be discerned, not a statement that just happens to be identified as true by the happenstance programming of butterfly wind and pizza. This assumption requires that an arbiter of such truth exist, and that humans have the capacity to access it.

These assumptive concepts cannot be demonstrated necessary, because they are that which make such demonstrations possible; they are that which demonstrates, and that which receives the demonstration, and that which discerns true statements concerning any demonstration.

All worldviews rest upon certain faith-based assumptions that cannot themselves be proven or demonstrated; LFW is one of them, whether one admits it (even to themselves) or not. The LNC is another, whether one admits it or not. The existence of an absolute arbiter is another – whether one admits it or not. Without them, there is no conceptual basis for any argument, and there is no reason to argue; there is no ability to discern the value of any arguments; and there is nobody available to hear and assess the argument.

IF LNC is not valid, the words and phrases cannot be assumed to have any specific meaning by which arguments can be made and understood. If there is no absolute arbiter of true statements, then all arguments amount to subjective rhetoric and manipulation, because “truth” is just whatever people agree it is. If there is no LFW, then all arguments are nothing more than butterfly wind and pizza in the first place, and all we understand and think of them is butterfly wind and pizza in the second place.

So no, the LNC, LFW and AA cannot be demonstrated to be true, but every non-rhetorical argument is conceptually predicated on them being true, just as those arguments are predicated on the concept that solipsism is not true, that we are not Boltzmann Brains.

Nobody argues for subjective truths – there’s no reason to insult other people or get pissed off or attack whole groups of people for believing something we don’t if, deep down, we thought we were just arguing for a subjective, butterfly wind and pizza truth. Nobody argues for “social truths”, as if what we are arguing about is only valid in our particular society at this particular time. Nobody argues as if the other guy is going to believe whatever his particular brand of butterfly wind and pizza makes him believe. Nobody argues as if whatever they believe just happens to be whatever their brand of butterfly wind and pizza happened to generate.

Everyone here argues as if what I believe (LFW) is true, and as if what they are arguing for (butterfly wind and pizza) is false.

Here is another exercise at which you will almost certainly fail.

Will yourself to learn some real science.

The fact that you can’t is pretty strong evidence that you don’t have the free will that you claim to have. You are chained by dogma.

“My local sports team is superior to your local sports team.”

You keep repeating these assertions, William, with no other justification than the additional assertion that we have to assume they are true.

I’m not buying it.

Could you please address the argument I offer in the OP, namely, that a decision cannot be both uncaused and informed?

I wonder what William has been smoking. Whatever it is, it seems to be a powerful hallucinogen.

No they aren’t, and no they aren’t arguing for butterfly wind and pizza.

I’m arguing that the thing I call “I” makes willed choices from a range of options, by virtue of the brain and body I call “mine”.

I’m not arguing “as if” I have an uncaused but nonetheless miraculously informed W hovering near my brain, acting as a revising chamber to my brain’s decisions, because I don’t think it’s true.

The thing I call “I” is the organism, Lizzie, when she’s awake, and alert enough to weigh the likely consequences of alternative courses of action to herself (that’s Lizzie, the organism) now, and in the future, and to others.

None of that is butterflies, wind or pizzas, although the processes by which she arrives at those decisions are deeply non-linear.

William,

You are obviously attached to your assumptions. You might even regard them as emotionally necessary. However, they are clearly not logically necessary.

As I pointed out above, you can demonstrate this yourself. Take any assumption you regard as logically necessary. Assume the contrary, and see what that implies. If nothing “bad” happens, then the assumption wasn’t necessary after all.

You claim that we must assume that we have “autonomous access” to “an absolute arbiter of true statements” (henceforth ‘AA2AA’). Let’s assume the contrary and see what happens.

If we do not have AA2AA, then we have to rely on our own imperfect judgments of what is true and what is false. We might be mistaken, even about those things of which we are certain or almost certain.

Others may come to different conclusions. They might be mistaken. We might be mistaken. They and we might be mistaken.

We may try to persuade them of our views, using our imperfect faculties of reason and argumentation. They may do the same toward us. Even if we end up in agreement, we will never know, in the ultimate sense, who is right.

Now read over those three paragraphs again and ask yourself how they differ from the status quo. The answer, of course, is that they do not.

By assuming the contrary of AA2AA, we have lost absolutely nothing in logical terms. It was not a necessary assumption.

You, however, have lost the thrill of feeling that your cognitive faculties are plugged directly into the Divine. You may regret that, and you may even decide that it serves your emotional needs best to go ahead and make the unwarranted assumption. That’s up to you, but don’t pretend that your assumption is logically necessary. It clearly isn’t.

Also, your repeated references to “butterfly wind and pizza” are tendentious.

Computers are sometimes perturbed by inadequate supply voltages or alpha particles, but that doesn’t make them unreliable or useless. We can and do trust computers — just not unconditionally.

Likewise, brains are sometimes perturbed by factors such as what we’ve had to drink, but that doesn’t imply that “all arguments are nothing more than butterfly wind and pizza”, as you claim. We can and do trust our cognitions — just not unconditionally.

You should try cultivating some uncertainty. It has a salutary effect on one’s thinking.

Information may be necessary for particular decisions but that doesn’t mean the information is sufficient for the decision. Being informed doesn’t mean the decision is caused by the information.

Suppose the information is sufficient for the decision. Can you explain how the decision is both uncaused and yet informed?

To the extent that a decision is caused, it is determined. To the extent that it is uncaused, it is random.

Neither of these leaves room for libertarian free will. It is an incoherent concept.

Let me strip the above of the stolen concepts (semantics you are using to make BA appear to be the same as LFW: ”

That’s all you have available as “I”, “will”, “choice”, “decision”, true, false, correct, incorrect, fact, fiction, reason, madness. It’s all the same; it’s whatever the computation happens to compute. You have no presumed means of escaping absolute self-referential incoherence; you only hide it with semantics not meaningfully applicable under your worldview.

Part of Lizzie’s computation is deciding what she wants (her will). Another part of her computation enumerates the options open to her. Another part considers those options and their likely consequences. Still another part of the computation evaluates those consequences and determines which option would be best. Still another part puts her choice into effect.

You can label all of these as computation, and I won’t disagree with you. However, I won’t disagree with Lizzie either if she labels them as “will”, “foresight”, “choice”, “decision”, “implementation”, etc.

Both perspectives are correct. All of those things are examples of computation.

Now, instead of just reiterating your assertions ad infinitum, how about responding to our criticisms of them?

Sure it is. You already agreed to this, that the material computation is chaotic (butterfly wind) and unpredictable, from both external and internal non-linear factors (the pizza you ate last night); which could be what tips one from belief A to belief B. You cannot say that a butterfly wind was not the last, necessary component that gave sufficient cause for you to believe in materialism – or that a pizza you ate the night before might be the accumulative, final cause.

Under materialism, what you think and what you believe and what you conclude can result not just from evidence and arguments pertinent to the issue, but from chaotic, non-linear, unpredictable sources – like butterfly wind and pizza. There is no “you” that can override such determining effects if they happen, because “you” are nothing but the computation itself. You **are** whatever butterfly wind and pizza says you are.

If your particular accumulation of butterfly wind and pizza – meaning, the material computation that is called “Liz” – computes output X in terms of written words and beliefs and sensations about those words, that’s all Liz can do, and all that Liz is, whether the words are rational or nonsense.

Furthermore, under your paradigm, whatever you think “evidence” is, whatever you think “reason” is, and however you apply it, and whatever you think and feel about how you apply it, and what you conclude and how you feel about your conclusions, and that of others, is just a result of the material computation. There is no “error check” outside of the system; there is no “objective” analysis. There is no presumed absolute measuring stick to gauge by. It’s all the relative, happenstance mish-mash of interacting butterfly wind and pizza computing outputs.

Material computations are not engineered to produce “true” statements in any meaningful sense; material computations produce statements along with the belief that they are true or valid, whether the computation is labeled a religious zealot or a materialist physicist or a criminally insane madman. The idea or feeling or thought that a belief is true or valid is just computed output. Nothing more or less.

But, you cannot argue that way, or exist that way, which is why you cannot argue materialism in its native language. The native language of materialism is self-referential nonsense – so, materialists must steal the language of dualists – trialists – theists to make arguments that don’t even matter under materialism.

If the computation is really chaotic, non-linear and unpredictable, then why are you even offering arguments as if you can predict what the conclusion of such arguments should be?

No, every one of you is arguing as if **everyone** should conclude, from those arguments, the same conclusions you reached, and that those conclusions (and thus adopted worldview components) are the predictable outcomes of such input. You’re not arguing **as if** a huge collection of unpredictable, chaotic, non-linear events are necessary to reach such outcomes; you’re arguing as if there is a linear, objective truth that can be obtained by free will entities not bound to their particular chaotic, unpredictable computations.

You might as well just throw out random comments and stories. You might as well just type in random sequences of keystrokes; who knows what unpredictable element might generate the conclusion you wish someone to reach? Why not build an altar to Superman and pray? It has at least as much chance as butterfly wind and pizza to affect the conclusions of others through non-linear, unpredictable chains of events.

Right?

It’s getting pretty bizarre and repetitive. He doesn’t appear to sense anything except what is going on inside his head.

This is clearly not worth following.

If you are, and say, and do **whatever** the computation of physics commands, and I am, and say, and do **whatever** the computation of physics commands, why are you even arguing with me? What’s the point? I will believe whatever physics commands. I will behave however the computation of physics commands. Who knows what nonsensical beliefs might be required (in a non-linear, chaotic, unpredictable way) for some great invention or advance?

Why are you trying to talk me out of the very beliefs that the computation of physics (under your paradigm) has instilled in this portion of the physical universe? Do you think you can predict how my views are going to affect my life, or the world? Why are any of you arguing against intelligent design, or religious belief in general? Do you believe you can predict the future? Do you believe you can retro-dict what would have occurred without it in history? Can you predict that people will be better off without it, and better off with a materialist mindset?

The only answer you can have, in the native language of materialism, is that you argue not because your arguments are true, or valid, or will have any particular effect, but only because physics compels you to, whether your arguments are for better or worse, or are rational or nonsensical – you argue for the same reason the leaves rustle in the wind: physics compels it.

So, the reason you make such arguments is not about truth per se, or for any meaningful principle – the wind blew, and you rustle. And that’s the sum total of available reason under materialism, where the reason you do anything is because you were caused to do it.