Recently I described a possible alternative Turing test that looks for certain non-person-like behavior. I’d like to try it out and see if it is robust and has any value.

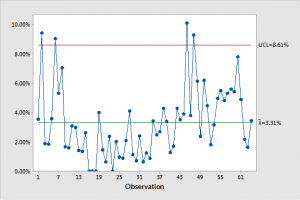

Here is some data represented in an ordinary control chart.

It’s easy to see there is a recognizable pattern here. The line follows a downward trajectory until about observation 16, then meanders until about observation 31, at which point it begins an upward track that lasts almost to the end of the chart.

The data in question is real and public but obsolete. I won’t say what its source is right now, to avoid biasing your attempts to see whether we can infer design. I can provide the actual numbers if you like.

The question before the house is this: is the clear overall pattern we see here a record of intention, or does it have a “non-mental” cause?

Using the criteria discussed in my “poker” thread, I would suggest looking for the following behaviors and excluding a mental cause if they are present:

1) large random spikes in the data

2) sudden changes in the overall pattern of the data that appear to be random

3) long periods of monotony

4) unexplained disjunction in the pattern.
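One way to make criteria 1 to 3 concrete enough to run is sketched below. This is a minimal sketch under assumptions that are not in the original post: “large spikes” is read as points more than `k` median absolute deviations from the median, “sudden changes” as a jump in the mean between adjacent windows of `window` points, and “monotony” as a run of values that stay within `tol` of each other. The function names and default thresholds are hypothetical, and criterion 4 (“unexplained disjunction”) is left out because it has no obvious numeric reading.

```python
import math
import statistics

def spike_indices(xs, k=5.0):
    """Criterion 1 (assumed reading): indices of points more than k median
    absolute deviations (MAD) away from the series median."""
    med = statistics.median(xs)
    mad = statistics.median([abs(x - med) for x in xs])
    if mad == 0:
        # Almost-constant series: any point off the median stands out.
        return [i for i, x in enumerate(xs) if x != med]
    return [i for i, x in enumerate(xs) if abs(x - med) / mad > k]

def level_shifts(xs, window=5, k=3.0):
    """Criterion 2 (assumed reading): indices i where the mean of xs[i:i+window]
    differs from the mean of xs[i-window:i] by more than k pooled
    within-window standard deviations."""
    shifts = []
    for i in range(window, len(xs) - window + 1):
        before, after = xs[i - window:i], xs[i:i + window]
        pooled = math.sqrt((statistics.pvariance(before) + statistics.pvariance(after)) / 2)
        gap = abs(statistics.fmean(after) - statistics.fmean(before))
        if gap > k * max(pooled, 1e-9):
            shifts.append(i)
    return shifts

def longest_flat_run(xs, tol=0.0):
    """Criterion 3 (assumed reading): length of the longest run of consecutive
    values that stay within tol of the first value in the run."""
    if not xs:
        return 0
    best = run = 1
    start = xs[0]
    for x in xs[1:]:
        if abs(x - start) <= tol:
            run += 1
        else:
            start, run = x, 1
        best = max(best, run)
    return best
```

The MAD is used instead of the standard deviation so that a single large spike does not inflate its own detection threshold.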

I have some other tests we can look at as well.

What do you say: design or not? Are you willing to venture a conjecture?

peace

I don’t know. Suppose we were looking to dig a swimming pool and you had to choose between a shovel and a backhoe.

Which one would the pragmatist choose?

peace

Depends on what the practical considerations are: cost, availability, time constraints, size of the pool, resources, soil, labor availability, personal whimsy.

Neil has already specified he is looking for “what works”.

Consideration is something that persons do. It’s beyond the abilities of non-mental things like evolution. With things like that, you would need to specify the “consideration” in advance.

peace

Randomness is useless as a test for agency. As has been explained to you, agents can generate data that is indistinguishable from random. And natural processes can generate data that is highly regular.

The only way to correctly infer agency is when data matches a pattern that you know is the result of agency, and that you know (to the best of your knowledge) does not occur in nature.

I don’t have any problem with the response from newton.

Why does it need to be specified in advance?

One team tries one method. Another team tries an alternative method. These trials go on in parallel. Natural selection takes care of which answer survives. That’s the pragmatic decision making of evolutionary processes, all of it non-mental.
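The parallel-trials picture in this comment can be made concrete with a toy selection loop. In this sketch, which is an illustration and not a model of biological evolution, each “method” is just a number, the hypothetical `fitness` function stands in for the environment, and every generation the better-scoring half survives and spawns randomly perturbed copies. Note that the scoring function has to be supplied to the loop up front.

```python
import random

def evolve(fitness, population, generations=200, mutation=0.5, seed=0):
    """Toy selection loop: candidates are numbers, `fitness` scores them, and
    each generation the top half survives and produces mutated copies."""
    rng = random.Random(seed)
    pop = list(population)
    for _ in range(generations):
        # Rank every candidate "method" by how well it did this round.
        pop.sort(key=fitness, reverse=True)
        survivors = pop[: max(1, len(pop) // 2)]
        # Survivors are kept unchanged; their offspring carry small random variations.
        children = [s + rng.gauss(0.0, mutation) for s in survivors]
        pop = survivors + children
    return max(pop, key=fitness)

if __name__ == "__main__":
    # Hypothetical environment that rewards candidates near 3.0.
    print(evolve(lambda x: -(x - 3.0) ** 2, [0.0, 10.0, -5.0, 7.0]))
```

Because survivors are carried over unchanged, the best score never gets worse from one generation to the next; the random offspring are the only source of new “methods”.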

If you can’t get a backhoe into your yard, a shovel is what works. Multiple strategies end up at the same point.

The poker bot chooses between options depending on the opponents play, it learns. The strategies that fail are discarded.

Only if you are seeking a specific goal.

Right, so since the pragmatist chooses what works, it will choose the shovel in that case.

On the other hand I might choose to renovate the yard before I build a pool.

No, it executes its program, which includes (among other things) changes to its strategy depending on what its opponents are doing. Computers don’t choose.

No, computers don’t learn; they execute their programs, which include discarding strategies that fail.

“What works” is a specific goal. If you don’t specify it, you end up with “what happens”.

peace

Again, we are not trying to decode secret messages. A person can always fool any test if they try hard enough.

“highly regular” will also fail the test.

Again, if you want to participate, you will need to pay at least a little attention.

peace

So how would you say that “pragmatist” choice differs from the good ole fashioned choosing that I do? If there is no difference, then why the need for a label?

peace

I don’t know enough about your “good ole fashioned” choosing.

I use “pragmatic” because it seems to be the appropriate description. I make a distinction between decisions based on truth and logic alone (algorithmic decisions), and other kinds of decisions which typically have a pragmatic aspect.

So my sine wave oscillator output would be judged as resulting from a non-mental process?

I would find that slightly insulting.

All decisions have a pragmatic aspect; there are always trade-offs.

Those agents who choose based on truth and logic alone value truth and logic above everything else.

Someone else might value “resources, soil, labor availability, personal whimsy,” etc.

peace

I value cats. How do cats fit in the pragmatic equation?

I don’t mean to insult anybody, but I’d say that the output in question would definitely qualify as the number three non-mental behavior on the list: long periods of monotony 😉

peace

Cats.

What if I modulate the sine wave to make three one-second bursts, followed by three five-second bursts, then another three one-second bursts, and then that whole pattern repeats indefinitely?
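For concreteness, the on/off pattern described here can be written as a small generator. The silent gap between bursts (`gap`, one second by default), the 440 Hz carrier, and the function names are assumptions; the comment does not specify them. With a one-second gap, one full cycle is 21 s of tone plus 9 s of silence, i.e. 30 s.

```python
import itertools
import math

BURSTS = [1.0] * 3 + [5.0] * 3 + [1.0] * 3  # seconds of tone per burst, one cycle

def burst_schedule(gap=1.0):
    """Yield (tone_on, seconds) segments forever: each burst in BURSTS,
    followed by `gap` seconds of silence (the gap length is an assumption)."""
    for burst in itertools.cycle(BURSTS):
        yield True, burst
        yield False, gap

def envelope(t, gap=1.0):
    """Return 1.0 if the tone is on at time t (t >= 0, in seconds), else 0.0."""
    elapsed = 0.0
    for on, seconds in burst_schedule(gap):
        if t < elapsed + seconds:
            return 1.0 if on else 0.0
        elapsed += seconds

def sample(t, freq=440.0, gap=1.0):
    """Sine oscillator output gated by the burst envelope."""
    return envelope(t, gap) * math.sin(2 * math.pi * freq * t)
```

`envelope` walks the schedule from zero on every call, which keeps the sketch short; a real signal generator would instead reduce `t` modulo the 30 s cycle.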

Sounds simple and monotonous enough to be non-mental, right?

It definitely could be non-mental IMO.

I don’t mean to be rude but you need to step your game up a little here. This was all covered ages ago.

peace

Why? How are you applying your “criteria” to make that inference?

Or do you need to revise/update your criteria?

You are the one trying to be scientific and rigorous, so get on with it.

I am getting a bit confused. If you don’t mind, could you lay out the hypothesis we are trying to test?

Funny, I thought the whole point of the poker test was that humans couldn’t do what the bot did.

One gets a pool built, the other does not. If the goal is enlightenment, truth might be the pragmatic choice. Logic is always useful.

Depends how picky you are with the definition of “works”. Pretty sure you always end up with what actually happens.

This appears to be a variation of the game, which wasted weeks of everyone’s time.

It’s a simple Turing test: we are trying to determine whether the pattern we see in the chart is mental or not.

Just as with any Turing test, failing does not mean that the source of the data is not a mind; it only means that we could not determine that it was a mind with the available evidence.

No, the point was that minds behave differently than non-mental processes.

The poker players could mimic the bot, perhaps with a bot of their own, but even if they did, the resulting behavior would be non-personlike.

personal whimsy is not the most efficient way to get a pool built. 😉

The point is that agents can have lots of goals besides “what works”, unless, that is, you specify that “what works” is simply whatever corresponds to my particular goals. If you do that, then “what works” is redundant.

peace

more interesting stuff about mental verses non-mental processes

http://bigthink.com/paul-ratner/automation-nightmare-we-might-be-headed-for-a-world-without-consciousness?utm_source=realclearscience&utm_medium=social&utm_campaign=partner

If you see a pattern, then it is mental — because perception is mental.

This is probably not what you meant. Still, it’s a point that you are missing and is why your tests seem dubious.

Mental verses — what kind of poetry is that?

</spelling-nazi>

You’re assuming your conclusion. What is it that humans do when they change their strategies based on what their opponents are doing that is qualitatively different from what the poker program is doing?

I have written and been responsible for the development of software systems that learn. They change their behavior based on real world input, including feedback on how well their previous decisions worked.

I can write a program that produces different output based on real world input, including feedback on how well the previous output worked. It doesn’t mean the software learned anything.

learn: gain or acquire knowledge of or skill in (something) by study, experience, or being taught.

A software system that gains knowledge from its environment and the results of its behaviors and adjusts its responses to improve its performance at a task is learning, by definition.
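The behavior described here (adjusting responses in light of feedback on earlier decisions) can be shown with a standard epsilon-greedy bandit. This sketch is not the poker bot discussed in the thread; the strategy names, payoffs, noise level, and `epsilon` are made up for illustration. It shows the mechanics only; whether those mechanics deserve the word “learning” is the point in dispute below, and the code does not decide it.

```python
import random

class EpsilonGreedy:
    """Keeps a running average payoff for each strategy, usually plays the
    best-looking one, and tries a random one with probability epsilon."""

    def __init__(self, strategies, epsilon=0.1, seed=0):
        self.strategies = list(strategies)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {s: 0 for s in self.strategies}
        self.values = {s: 0.0 for s in self.strategies}

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.strategies)  # explore
        return max(self.strategies, key=self.values.get)  # exploit

    def update(self, strategy, payoff):
        """Fold feedback on the last decision into that strategy's average."""
        self.counts[strategy] += 1
        self.values[strategy] += (payoff - self.values[strategy]) / self.counts[strategy]

def play(agent, mean_payoffs, rounds=3000, seed=1):
    """Hypothetical environment: each strategy pays its mean plus Gaussian noise."""
    env = random.Random(seed)
    for _ in range(rounds):
        s = agent.choose()
        agent.update(s, mean_payoffs[s] + env.gauss(0.0, 0.5))
    return agent
```

For example, `play(EpsilonGreedy(p), p)` with `p = {"fold": -1.0, "call": 0.0, "raise": 2.0}` ends up playing `"raise"` most of the time, because its running average overtakes the others once it has been tried.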

If I can write software that learns, I’m not sure it’s something worth bragging about. Just sayin’. 🙂

Got that; that is the test. The standard structure of a Turing test, though, would consist of showing two patterns and determining which was human, and the goal of the machine would be to imitate human patterns.

So what hypothesis is this testing? That humans can determine which patterns could only be caused by a human mind at the present time? Is there some practical application that relates to evolution?

So you can only show it is a human mind, not that it isn’t. That is where my question about the poker game came from. The poker players said humans could not create the behavior.

Except you said human minds could act the same if they chose to.

The goal of the bot was not to be personlike, it was to win at poker. And it did.

Pragmatism isn’t always about efficiency, it is constrained by parameters.

It seemed to work well in the poker bot.

A software system does not know anything, by definition.

Humans choose that is qualitatively different than what the poker program is doing which is executing a program

A river changes its behavior based on real-world input, but it does not learn anything. Just like the software systems you’ve designed.

That does not mean that rivers and software systems aren’t awesome; it just means they are not minds.

peace

That’s why this approach is an alternative Turing test.

We are trying to identify behaviors that are non-personlike and, by process of elimination, ascertain those that are “mental”.

That mental behavior is different from non-mental behavior.

I suppose ideally we could see if patterns in nature are similar to those produced by minds.

Of course we could; but the problem of other minds tells us that we could never establish that the driving force behind the panorama of life is a mind, just as we can never prove that any other minds exist at all.

No need to worry you are safe 😉

No, you can’t ever establish that it is any mind at all. It’s simply impossible to do so. All we can do is establish whether it behaves like a mind or not.

Yep, we can act like a bot if we want to put in the effort. I’ve often been accused of doing just that here.

The question is can a bot behave like a mind does.

peace

Every kind of choosing is constrained by parameters. How is Pragmatism different?

peace

know: be aware of through observation, inquiry, or information.

aware: having knowledge or perception of a situation or fact.

knowledge: facts or ideas acquired by study, investigation, observation, or experience

A software system that maintains information acquired by observation and experience has knowledge.

You’re just repeating your assertion. How is what humans do when choosing qualitatively different?

People change their behaviors based on real world input. Just like the software systems I’ve built.

You’re still in need of some rigorous operational definitions if you want to have a hope of identifying whatever it is you think is unique about human cognition.

Nope.

According to your own definition, in order to have knowledge you need to have awareness, and software does not have this.

It’s not an assertion; it’s a definition.

Again, awareness (read: consciousness) is qualitatively different from non-consciousness, or else it would be murder to recycle a hard drive.

So do rivers, yet people are qualitatively different than software systems and rivers.

Come on, Patrick, think.

Once again, the whole world is able to function just fine without the sort of definition you are asking for, and we have no problem distinguishing between our spouse and our MacBook when it comes to cognition.

I happen to believe that cognition will forever remain ill defined to some extent.

If you could define it with perfect rigor, you could build an algorithm to accomplish it, and by definition you can’t do that.

peace

Here is the raw data behind the chart.

Wow, fifth. Some folks have drunk deeply from the well.

Software systems don’t make observations and do not have experiences. Try again.

Here are the definitions you cut:

know: be aware of through observation, inquiry, or information.

aware: having knowledge or perception of a situation or fact.

knowledge: facts or ideas acquired by study, investigation, observation, or experience

I anticipated that you’d get hung up on the word “aware” in the first definition, so I provided the others to demonstrate that software systems can, in fact, meet them.

I asked “What is it that humans do when they change their strategies based on what their opponents are doing that is qualitatively different from what the poker program is doing?” You replied “Humans choose that is qualitatively different than what the poker program is doing which is executing a program”

That is simply repeating your assertion, not describing what is qualitatively different.

That is non-responsive to the question. I said nothing about consciousness, I asked about choosing. Software systems are capable of deciding among alternatives. What specifically do you see as qualitatively different when humans do so?

How exactly are people qualitatively different from software systems and rivers? Are you able to articulate those differences in both cases?

Let’s refrain from rhetorical devices here. I’ll assume you’re willing to think about these issues.

If you want to make a credible case for whatever it is you’re arguing, you’re going to have to do better than hand waving and invalid analogies.

Again assuming your conclusion. If you aren’t willing to provide clear operational definitions for your terms, why should anyone take your claims seriously? Without those you are literally writing nonsense.

observation: the action or process of observing something or someone carefully or in order to gain information.

observe: notice or perceive (something) and register it as being significant.

experience: encounter or undergo (an event or occurrence).

I have built software systems that do those things. I know of many others that do as well.

Computer systems cannot perceive or decide what will be significant or what will not be significant.

Your claim that observation means to observe just begs the question. Computer systems do not observe. Computer systems are not actors, so they do not perform actions. The claim that computers do things “in order to” is entirely teleological. And they don’t do things in order to gain information. Not even carefully.

Computer systems do not have encounters.

I have built some that do. Not only that, they learn over time what is significant and when what is significant changes, they adapt to that.
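A common way to get the adapt-when-significance-changes behavior described here is an exponentially weighted baseline: a reading counts as significant when it sits far from the running mean, and the baseline then absorbs it, so a level that persists stops being flagged. This is a minimal sketch under assumed parameters (`alpha`, `k`, `warmup`, and the class name are hypothetical); it is not a description of the systems mentioned in the comment, and it says nothing about whether such a system “decides” anything.

```python
import math

class AdaptiveSignificance:
    """Flag readings more than k running standard deviations from an
    exponentially weighted mean; the baseline then adapts to each reading."""

    def __init__(self, alpha=0.1, k=3.0, warmup=10):
        self.alpha, self.k, self.warmup = alpha, k, warmup
        self.mean, self.var, self.n = 0.0, 0.0, 0

    def observe(self, x):
        """Return True if x is significant relative to what has been seen so far."""
        self.n += 1
        if self.n == 1:
            self.mean = x  # seed the baseline with the first reading
            return False
        sd = math.sqrt(self.var)
        significant = self.n > self.warmup and abs(x - self.mean) > self.k * max(sd, 1e-9)
        # Incremental exponentially weighted mean and variance updates.
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return significant
```

Because the variance is also exponentially weighted, the detector’s notion of “significant” widens right after a change and narrows again as the new level settles.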

I have built software systems that observe a variety of real world events, learn from them, and act on them.

What are they, kumquats?

No, it’s a description of the behavior of certain software systems.

observation: the action or process of observing something or someone carefully or in order to gain information.

Note the second “or” there. Note also that there are reasoning systems that work to acquire additional information.

encounter: unexpectedly experience or be faced with

I have built software systems that encounter new events and information as part of their normal operation.

This is why operational definitions are essential. Clearly it’s important to you and fifthmonarchyman that human cognition is special and can’t be replicated in software even in principle. You’ve got a ways to go to make that case, though.

It’s not. Pragmatism is one basis for choosing: success in practical applications. One could instead use emotion, revelation, philosophy, tradition, truth, or religion, or, like most people, a mixture. Pascal’s Wager.

Why are you disparaging kumquats? What did they ever do to you?

Human mind, fifth, unless you have some reason to think all minds behave the same way.

Humans behave like humans but can imitate machines. A bot doesn’t have to behave like a human; it just has to be able to imitate one.

A very telling exchange.

Mung: X can’t be done

Patrick: I’ve built X

Mung: What have you got against fruit?

It seems Mung has no counterargument but also refuses to acknowledge error. I guess all those science books could not counteract the Creationist in him.

I have previously discussed with FMM the fact that even if a piece of software to all intents and purposes appears to be conscious, he would have no qualms about deleting it simply because he knows it cannot be conscious as it’s “just” software.

So yes, it’s a core conceit that is totally unsupported that I guess makes them feel special.

It is all completely circular.

Computers can’t think because they are not like humans.

Computers are not like humans because they can’t think.

I challenge Mung et al. to give an argument as to why computers cannot be conscious that does not apply to us.

Laughable. What does observation mean then Mung? Can you define it in such a way that computer programs cannot do it?

I think it’s fair to say that when we say something is “intelligent” or has a mind, what we mean is that it thinks somewhat like us, so that we could in theory interact with it socially.

Imitating a person and behaving like a person are synonymous.

peace