Recently I described a possible alternative Turing test that looks for certain non-person-like behavior. I’d like to try it out and see if it is robust and has any value.

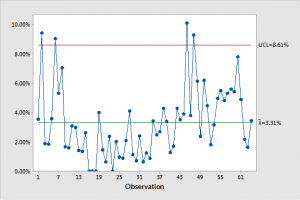

Here is some data represented in an ordinary control chart.

It’s easy to see there is a recognizable pattern here. The line follows a downward trajectory until about observation 16, then meanders around until about observation 31, at which point it begins an upward track that lasts until almost the end of the chart.

The data in question is real and public but obsolete. I won’t say what its source is right now, to avoid biasing your attempts to see if we can infer design. I can provide the actual numbers if you like.

The question before the house is: is the clear overall pattern we see here a record of intention or does it have a “non-mental” cause?

Using the criteria discussed in my “poker” thread I would suggest looking for the following behaviors and excluding a mental cause if they are present.

1) large random spikes in the data

2) sudden changes in the overall pattern of the data that appear to be random

3) long periods of monotony

4) unexplained disjunction in the pattern.
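
For anyone who wants to try these four checks on their own numbers, here is a rough Python sketch. It is only one possible reading of the criteria, not a method taken from the “poker” thread: the function names, the 3-sigma threshold, and the use of the plain sample standard deviation (real control charts often estimate sigma from moving ranges instead) are all assumptions made for illustration.

```python
from statistics import mean, stdev


def control_limits(values, sigmas=3.0):
    """Return (center, lower, upper) as mean +/- sigmas * sample std dev.

    Assumption: sigma is the ordinary sample standard deviation.
    """
    center = mean(values)
    spread = stdev(values)
    return center, center - sigmas * spread, center + sigmas * spread


def spikes(values, sigmas=3.0):
    """Criterion 1: indices of points outside the control limits."""
    _, lower, upper = control_limits(values, sigmas)
    return [i for i, v in enumerate(values) if v < lower or v > upper]


def longest_flat_run(values, tolerance=0.0):
    """Criterion 3: length of the longest run in which each point differs
    from the previous one by no more than `tolerance`."""
    if not values:
        return 0
    best = run = 1
    for prev, cur in zip(values, values[1:]):
        run = run + 1 if abs(cur - prev) <= tolerance else 1
        best = max(best, run)
    return best


def largest_jump(values):
    """Criteria 2 and 4: (index, size) of the biggest step between
    consecutive points, a crude stand-in for a sudden change or disjunction."""
    steps = [abs(b - a) for a, b in zip(values, values[1:])]
    size = max(steps)
    return steps.index(size) + 1, size
```

None of these functions decides “mental” versus “non-mental” on its own. They only flag where to look, and whether a flagged spike or jump counts as “random” or “unexplained” is still a judgment call.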

I have some other tests we can look at as well.

What do you say: design or not? Are you willing to venture a conjecture?

peace

You still haven’t picked up your game yet. You need to pay attention if you want to participate

If a computer behaved like a person we could assume that it thought like a person. They don’t so we don’t

That is what Turing tests are all about

peace

Why? I’m not saying that a computer can’t be a person. I’m saying that a computer is not a person.

If a computer wants to claim to be a person it had better act like one

peace

So why the need for the label? Instead of saying that this is about pragmatism why can’t we just say it’s about choosing?

peace

I’m going to reveal the source of the data soon so now would be a good time to chime in with your opinion. Is the pattern in the chart the result of a mental or non-mental cause?

There will be praise for getting it right and scorn and mockery for getting it wrong or not venturing a guess 😉

peace

What do you mean by “to all intents and purposes appears to be conscious”?

Do you mean that the software in question can’t be distinguished from a person?

If that was the case I would not know how to delete it just as I don’t know how to delete you.

peace

With the exception of mental behaviors that are unpersonlike.

Of course success in a Turing test would show that behavior similar to the point of being indistinguishable from mental behavior could be non-mental behavior.

Like ID

Seems unnecessary since you already know that without the math

Just like to know how things work

Probably for the same reason you label yourself a Calvinist

Could you show patterns from minds not human so we could do a Turing test on that assumption?

I disagree; humans behave like humans imitating the appearance of a machine.

Not sure I follow. To say one is a Calvinist is to say that he holds to the 5 points that distinguish us from those Christians who hold to libertarian free will.

You have failed to mention even one thing that distinguishes a pragmatist from a run of the mill chooser

Turing tests are not necessary for this

Chalmers’ zombies have already shown us that non-mental behavior could be indistinguishable from mental behavior.

not “like” ID.

Another approach to ID.

How do you know the pattern in the OP is not from a mind that is not human?

Interesting. Do you think we could distinguish between

1) behaving like a machine

2) behaving like a human behaving like a machine

Perhaps there is another test in there somewhere

peace

FMM,

You don’t actually know what a Turing test is, do you?

But let me clarify my question.

There is a lever called “delete this software”. When you pull it the software is deleted. You are communicating with the software via a computer, much like we are communicating now.

The point is that no matter how much you were convinced “the software” was conscious, you’d have no qualms pulling the lever. This is a summary of your own position from your own words. If you dispute this I will be happy to find the original exchange.

And you’d have no qualms pulling the lever because consciousness is “special” and reserved for humans, and no mere computer program can achieve consciousness.

If you like you can pretend that you don’t know what “communication” means or some other such diversion to avoid addressing the point. You seem to have forgotten what you’ve written in the past on this. But not to worry, the internet does not forget.

No the point is that when it comes to software I would never be convinced that it was conscious.

What would have to happen is that it would have to convince me that it was not software but a person. That is why it’s called the imitation game.

No, it’s not reserved for humans. God is not human but he is personal and he is conscious. In fact there are three persons in the Godhead and each is conscious. Angels and demons would also be nonhuman persons that are conscious.

No, I don’t think I have forgotten.

I’m pretty sure that I would not say anything that would imply consciousness was exclusive to humans. To do that I would have to deny Christianity and I think that I would have remembered that

Perhaps you read something into the conversation that was not there

peace

More evidence that a software system is not and will never be a person

https://www.wired.com/2017/02/self-driving-cars-cant-even-construction-zones/

peace

You said:

If a computer behaved like a person we could assume that it thought like a person. They don’t so we don’t

Translation:

A computer does not behave like a person, so we don’t assume it thinks like a person.

This is the same as the first part of what I said: Computers can’t think because they are not like humans.

So for you to disagree with me, you have to disagree with the second part of what I said:

Computers are not like humans because they can’t think.

Do you disagree with that?

I guess this then also shows why 5 year olds are not persons?

Both Neil and I have pointed to what distinguishes a pragmatist

Maybe not, but it is the point of the Turing test

Consciousness is equal to mental behavior?

Both rely on determining the probable source of patterns of the elements of a thing.

I don’t until you tell me what it is, care to divulge?

hit their hand with a hammer

Probably best you focus on this test

It’s funny how “evidence” now matters to FMM. What happened to revelation?

Perhaps we should try a different question. Why are you starting from the point of view that a software system is not and will never be a person? How do you know that FMM?

Please don’t wake the bot

That does not follow from the article cited.

Perhaps FMM could explain why that’s evidence for his claim. FMM?

I would say that if a computer could think it would be like a person in that respect.

peace

I have no clue what that is supposed to mean

peace

You link to an article that discusses the problems self-driving cars have with navigating road work obstructions as an illustration of why computers (software systems) are not persons.

Five-year-olds also can’t navigate roadwork obstructions, so they are also not persons.

I must have missed it. All I remember you saying is that a pragmatist decides according to parameters. All choosers do that

I strongly disagree.

quote:

The Turing test is a test, developed by Alan Turing in 1950, of a machine’s ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human

end quote:

from here

https://en.wikipedia.org/wiki/Turing_test

let’s give it a couple more days

Whose hand? The person behaving like a machine or the person behaving like a human behaving like a machine?

Peace

You apparently have a jacked up idea of what revelation is.

I have philosophical reasons for believing that a software system or an alarm clock or “evolution” will never be a person. I think that to confuse the two is a category error.

However I’m more than willing to be wrong. That is what the test is all about.

Because persons have minds and software systems do not.

Again I’m willing to be wrong. Chalmers’ zombies demonstrate that I will never be proven wrong. But it’s possible that you may convince me if your software system can pass the test

peace

It’s not about navigating roadwork obstructions. It’s about dealing with totally new situations that can’t be programmed for in advance.

Five-year-olds excel at these sorts of things; algorithms, not so much

peace

Quote:

The answer it seems—at least when it comes to navigating construction zones—isn’t to solve the problem. It’s to sidestep it altogether.

and

Earlier this year, Nissan became the first big player to declare it had no hope of making a car that could handle the whole world on its own. So it plans to use flesh-and-blood humans in remote call centers to guide troubled AVs around confusing situations, like construction zones. The company proposes its operators will use cars’ built-in sensors and cameras to guide vehicles through confusing situations. “We will always need the human in the loop,” Nissan’s Silicon Valley research head Maarten Sierhuis told WIRED in December.

end quote:

peace

That sounds a lot like the guidance parents give to children while they are still learning. Pretty soon, they won’t need it anymore. They grow up. They evolve. It’s the way of things, you know.

Interesting, so what process does a five-year-old use for dealing with totally new situations? Like you said, they aren’t preprogrammed, except by their previous experiences.

What is meant here by algorithms?

Autonomous cars have learning algorithms.

I believe fifth’s hypothesis is that human reasoning uses non-algorithmic mental activities and machines and nature use something else. Humans have an innate ability to distinguish the difference.

You seem to be saying the test of personhood is the ability to navigate construction zones in a car.

Look, if all you are trying to say is that computers are different from human beings, there is no disagreement. As to why they are different (apart from biology/technology), now that is where things might get interesting. At the highest level, both computers and humans accept inputs, process data and produce outputs, so from that vantage point there are no real differences.

One level down, looking in more detail at how the data processing takes place, again I don’t think there is much disagreement that computers do this in a different way than humans.

The problems start when you then introduce terms like ‘mind’, ‘person’, ‘thinking’ and so on. At that point we really need proper operational definitions, and they have to be broad enough to allow extrapolation from particular cases to the general. It is of no use to waste a lot of words on labeling a poker computer as ‘mindless’ when ‘mindlessness’ is narrowly defined as the way in which a poker computer plays the game.

This is exactly the same problem we have when defining and testing for ‘intelligence’, and why IQ tests have such limited value.

Then you say: Because persons have minds and software systems do not.

This right here is your problem. You haven’t defined ‘persons’ and you haven’t defined ‘minds’, and I bet that if you try and do this you’ll end up with the simple Venn diagram I mentioned before: the set of human beings who have minds and are persons, and the set of everything else (ignoring hypotheticals like gods and demons etc).

This reflects your worldview, nothing more. You may be right or you may be wrong, but you haven’t left yourself a way to find out.

Now, as to your data example, I think it is absurd to show a couple of dozen data points and suggest that there are simple criteria to decide if these are products of a ‘mind’ or not. First of all you haven’t provided an operational definition of ‘mind’ that we can test the data against. Next, there is massive confusion about what processes are ‘mindless’ and what are not – certainly a problem for a theist who believes God is upholding the world. Finally, this is a ridiculously limited sample set.

Ignoring the labels, this could be a record of snowfall in Northern Lapland (non-mental). Or it could be a record of snowplough activity in Northern Lapland (mental). Or it could be virtually anything else.

Nissan clearly say that cars will never grow up. They will always need humans to navigate anything new that comes along

peace

Mental processes. You know, the ones we are looking to identify in the test

peace

At least we understand each other. That is an amazing thing given our vastly different starting positions.

peace

No. A test (not the one I’m focused on now) would be the ability to navigate situations that it would be impossible to program for in advance

peace

Why? The entire world functions just fine without explicit definitions of these things and has for millennia. And there is not an epidemic of folks wanting to marry their operating systems.

I think that developing a precise definition would be highly counterproductive. If you could define it in that way then you could build an algorithm to produce it.

Part of what it means to be a person and a mind is to be beyond that sort of mathematical description.

Find out what?

In case you missed it I’ve proposed a way to “find out” whether a cause is mental or not.

It’s simple and I think pretty rigorous.

Minds behave like minds; algorithms, not so much

You need to stay tuned. I hope you will be surprised at the source of the data and the relevance of the test

peace

Here is a hint about the source of the chart.

https://www.youtube.com/watch?v=S5CjKEFb-sM

God willing I will let the cat out of the bag later today.

peace

Reading the article, I’m not sure that is what they are saying. It seems Nissan is using this hybrid system, where the vehicles call for help in certain situations, to speed up deployment. It is a bridge until the software and hardware catch up.

The world works just fine because the unspoken convention is that minds and thinking are the sole domain of human beings. As long as we all work with that, there won’t be an issue. The moment that we want to investigate if this convention is actually correct, we very much do need to be precise in our terms or we’ll never get anywhere. The discussions on here are a nice illustration of that.

I would call that highly productive, actually!

That is your claim, and what you have to demonstrate. So far you haven’t come close. Unless, of course, you define ‘person’ and ‘mind’ that way – which is the circularity in what you are doing.

Right got it, I was just explaining the meaning of the comment

again quote

Earlier this year, Nissan became the first big player to declare it had no hope of making a car that could handle the whole world on its own. So it plans to use flesh-and-blood humans in remote call centers to guide troubled AVs around confusing situations, like construction zones. The company proposes its operators will use cars’ built-in sensors and cameras to guide vehicles through confusing situations. “We will always need the human in the loop,” Nissan’s Silicon Valley research head Maarten Sierhuis told WIRED in December.

end quote

“Always” is a long time, and “no hope” is pretty definitive.

peace

That is not the unspoken convention. The convention is that minds are the sole domain of persons.

It’s only the materialist who would limit it to human beings

Again there is no way to demonstrate if the convention is correct. We know this because of the possibility of philosophical zombies that are indistinguishable from persons but are not conscious.

It’s called the problem of other minds and it will not be solved by defining our terms more precisely

We simply have no way of knowing if any minds besides our own exist at all.

We never have had this ability but we get along just fine

peace

It’s not just me; you do the same thing. It’s axiomatic and definitional

I know this because you don’t report it to the authorities when someone switches their computer off.

peace

Wow, we rarely see a poster undermine their own OP as thoroughly as you do here.

Just to remind you, this is what you wrote:

The question before the house is: is the clear overall pattern we see here a record of intention or does it have a “non-mental” cause?

And now you tell us that we have no way of knowing if other minds besides our own exist at all.

I guess I can answer the question before the house with ‘I don’t know and I never will!’

I understand the premise, but the point seems to be elusive, perhaps by design. If I was forced to guess, it would involve detecting the pattern of God. An age-old endeavor. Then again it could be something else.

Let me explain: I distinguish computers from human beings for several reasons: computers are built, not born; they are made of metal and plastic, not flesh and blood; computers of today are not self-aware nor do they have emotions. In other words, at this point in time computers are machines. So of course I have no problems with people switching off their computers.

What you are talking about is something else: you claim that it is in principle impossible to ever build a computer that thinks like a human and has human traits like consciousness, awareness, creativity etc. You base this claim on your idea that only human beings (and gods & other hypothetical beings) can have a mind.

That claim is just that, a claim. The onus is on you to provide a justification. Your argument that computers of today can’t ‘think’ is worth as much as the view of a 16th century person that people are not, and never will be, able to fly.

When challenged you retreat into pseudo-philosophical platitudes that even you yourself don’t believe, because they utterly contradict the whole idea of your OP.

Good point, maybe we should look at the paragraph that precedes the quote from WIRED

It’s a stunning admission, in its way: Nissan’s R&D chief believes the truly driverless car—something many carmakers and tech giants have promised to deliver within five years or fewer—is an unreachable short-term goal. Reality: one; robots: zero. Even a system that could handle 99 percent of driving situations will cause trouble for the company trying to promote, and make money off, the technology. “We will always need the human in the loop,” Sierhuis says.

I watched a YouTube video which pinpointed one difference between city driving and non-city driving. It is the ability to break the law: humans have the ability to disregard the rules in certain situations. Of course this ability also has catastrophic consequences

How does the test identify mental processes? From what you have said thus far, it’s supposed to go like this:

The experimenter does not attempt to build an algorithm to describe the data.

The experimenter says, “There’s an obvious (?) pattern there and no algorithm to describe the data, therefore there may be a designer with mental processes behind it.”

The preceding steps qualify as positive proof of design in the data.

Correct me if I’m wrong. And I mean correct me within the framework of the test that you have presented, not some presuppositions or conventions or assumptions or revelation or whatever extraneous.

Sorry, unhelpful. Your lack of curiosity about the structure of that which you seek to detect is interesting. It reminds me of ID, which has little interest in specifics.